Image generation moves fast. Though diffusion models used by popular tools like Midjourney and Stable Diffusion may seem like the best we have, the next thing is always coming, and OpenAI may have hit on it with “consistency models,” which can already do simple tasks an order of magnitude faster than DALL-E.

Last month, OpenAI released the paper as a preprint without the usual fanfare. This technical research paper is expected. However, this experimental technique yielded intriguing results.

Unlike diffusion models, consistency models make more sense.

In diffusion, a model gradually subtracts noise from a noise-only image to approach the target prompt. Today’s most impressive AI imagery relies on 10 to thousands of steps. That makes it expensive and too slow for real-time applications.

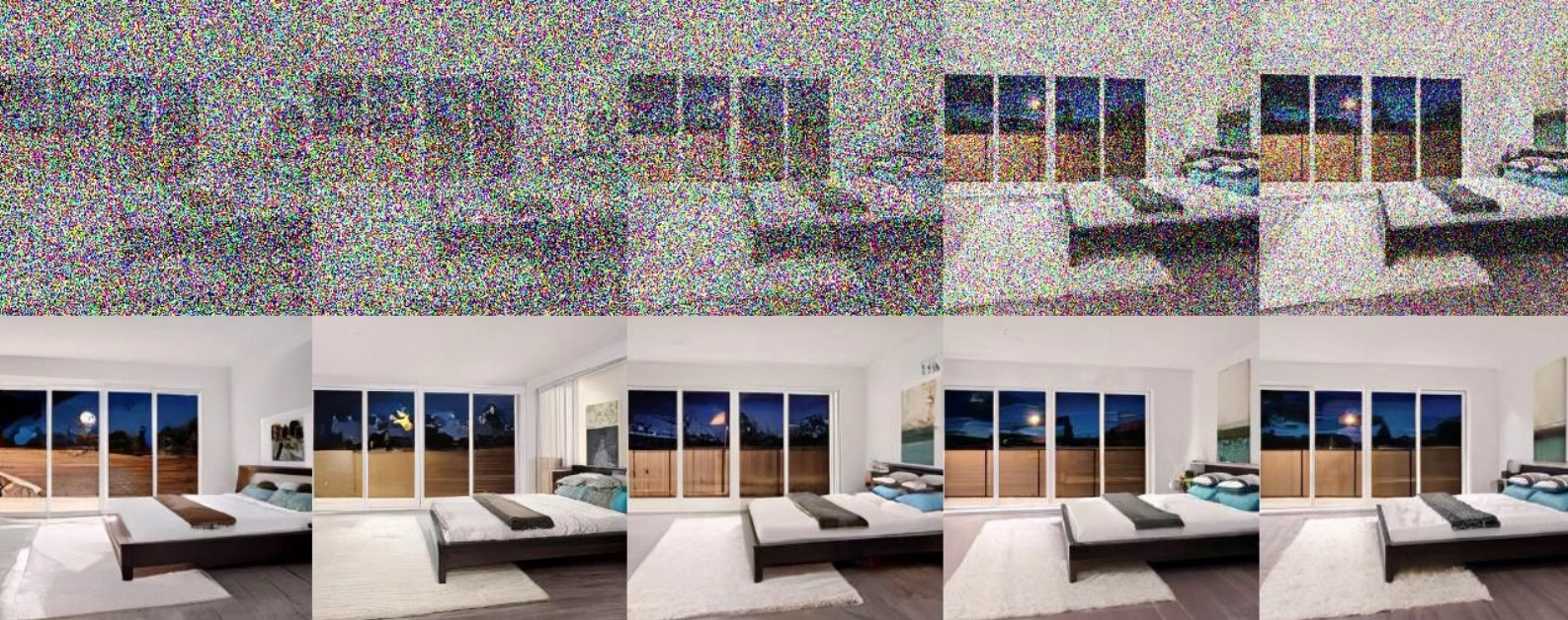

Consistency models should produce good results in one or two computation steps. The model is trained, like a diffusion model, to observe the image destruction process and generate a complete source image in one step from any level of obscuration.

But this is the most vague description of what’s happening. Paper like this:

Many of the images are barely good. But what matters is that they were generated in a single step rather than a hundred or a thousand. The consistency model also applies to colorizing, upscaling, sketch interpretation, infilling, and other tasks in one step (though often improved by a second).

First, in machine learning research, someone creates a technique, someone else improves it, and then others tune it and add computation to produce much better results. Modern diffusion models and ChatGPT came from that. Because you can only compute so much, this is self-limiting.

However, a new, more efficient method can do what the previous model did, albeit poorly at first. Though early, consistency models show this.

However, it shows that OpenAI, the most influential AI research organization in the world, is actively looking beyond diffusion at next-generation use cases.

Diffusion models can produce stunning results with 1,500 iterations in a minute or two using a GPU cluster. What if you want to run an image generator on a phone without draining its battery or provide ultra-fast results in a live chat interface? OpenAI’s researchers, including Ilya Sutskever, Yang Song, Prafulla Dhariwal, and Mark Chen, are actively searching for the right tool.

The research will determine whether consistency models are OpenAI’s next big step or just another arrow in its quiver—the future is likely multimodal and multi-model. I requested more information and will update this post if the researchers respond.

Tech Gadget Central Latest Tech News and Reviews

Tech Gadget Central Latest Tech News and Reviews