It’s often said that large language models (LLMs) like OpenAI’s ChatGPT are black boxes, and there is some truth to that. Even data scientists struggle to explain why a model responds the way it does, for instance when it invents facts out of whole cloth.

OpenAI is developing a tool that automatically identifies which parts of an LLM are responsible for which of its behaviors. As of this morning, the code to run it is available as open source on GitHub, though the engineers behind it stress that it’s still in the early stages.

“We’re trying to [develop ways to] anticipate what the problems with an AI system will be,” William Saunders, the interpretability team manager at OpenAI, said in a phone interview. “We want to trust the model and its answer.”

Ironically, OpenAI’s tool uses a language model to determine the functions of the components of other, architecturally simpler LLMs, such as GPT-2.

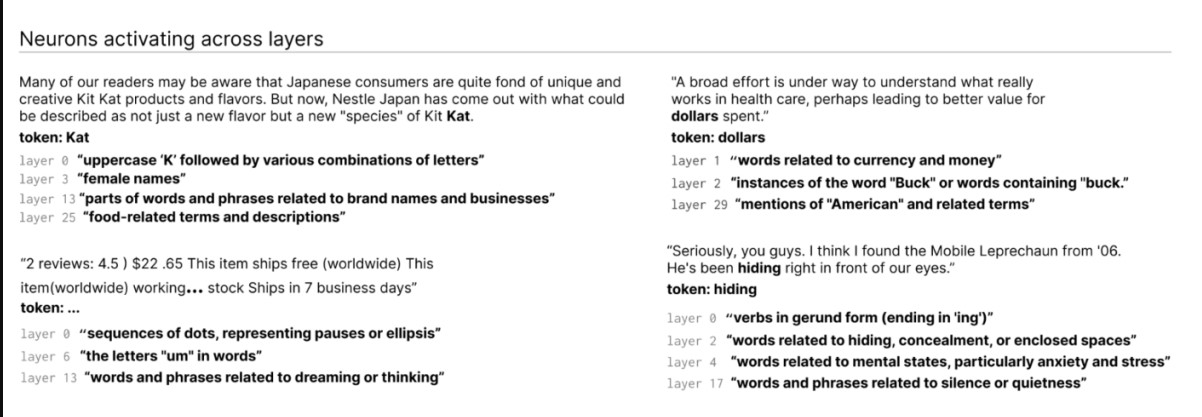

How does it work? First, some background on LLMs. Like the brain, they’re made up of “neurons,” each of which observes a specific pattern in text to influence what the overall model “says” next. For example, given a prompt about superheroes, a “Marvel superhero neuron” might increase the likelihood that the model names superheroes from Marvel movies.
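To make the idea of a neuron more concrete, here is a toy sketch. It is not GPT-2’s actual architecture: a single hypothetical “Marvel superhero neuron” fires on superhero-related words in the prompt and, when active, pushes up the next-token scores of Marvel hero names. The vocabulary, trigger words, and weights are all invented for illustration.

```python
import math

# Hypothetical toy vocabulary and baseline next-token logits (invented values).
VOCAB = ["Iron Man", "Thor", "Batman", "pizza"]
BASE_LOGITS = [1.0, 1.0, 1.0, 1.0]

# Hypothetical words the toy neuron "looks for" in the prompt.
TRIGGERS = {"superhero", "avengers", "marvel"}

# How strongly the neuron's output boosts each vocabulary item (invented).
OUTPUT_WEIGHTS = [2.0, 2.0, 0.0, 0.0]  # only the Marvel heroes get a boost


def neuron_activation(prompt: str) -> float:
    """Toy activation: the number of trigger words in the prompt."""
    hits = sum(word.strip(".,?!").lower() in TRIGGERS for word in prompt.split())
    return float(hits)


def softmax(logits):
    """Turn logits into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(prompt: str):
    """Add the neuron's weighted activation to the base logits, then normalize."""
    act = neuron_activation(prompt)
    logits = [b + act * w for b, w in zip(BASE_LOGITS, OUTPUT_WEIGHTS)]
    return dict(zip(VOCAB, softmax(logits)))


if __name__ == "__main__":
    print(next_token_probs("Name a superhero."))  # Marvel heroes favored
    print(next_token_probs("Name a food."))       # neuron silent: uniform
```

In a real LLM the neuron’s input is a learned function of internal representations rather than a keyword count, but the effect on the output is analogous: its activation shifts which tokens the model is likely to produce.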

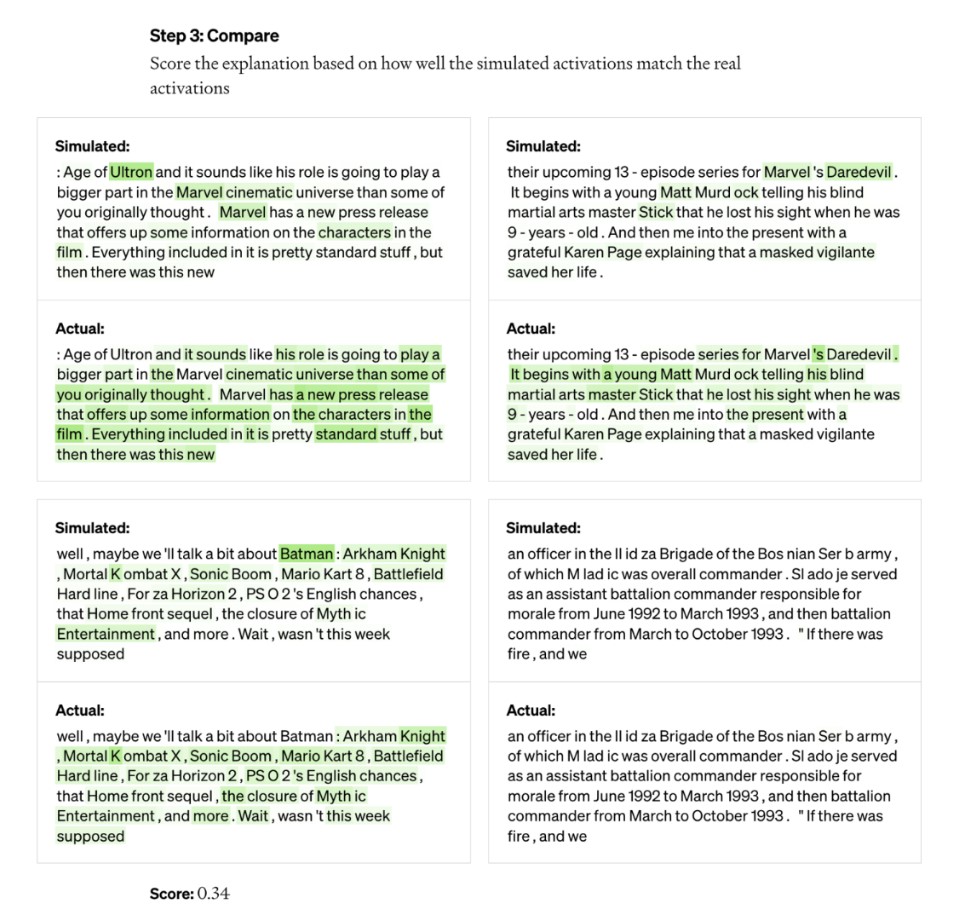

OpenAI’s tool exploits this setup to break models down into their individual pieces. First, the tool runs text sequences through the model being evaluated and looks for cases where a particular neuron “activates” frequently. Next, it “shows” GPT-4, OpenAI’s latest text-generating AI model, these highly active neurons and has GPT-4 generate an explanation. To test how accurate the explanation is, the tool then feeds GPT-4 text sequences and has it predict, or simulate, how the neuron would behave. Finally, it compares the behavior of the simulated neuron with that of the real one.
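The explain-simulate-compare loop described above can be sketched in a few lines of Python. This is not OpenAI’s released code: `explain_fn` and `simulate_fn` are hypothetical stand-ins for calls to an explainer model such as GPT-4, and the comparison step assumes a simple Pearson correlation between real and simulated activations.

```python
from typing import Callable, List, Sequence, Tuple

# A "record" pairs an excerpt's tokens with the real neuron's activation per token.
Record = Tuple[List[str], List[float]]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either side has no variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def explain_and_score(
    records: List[Record],
    explain_fn: Callable[[List[Record]], str],          # hypothetical explainer call
    simulate_fn: Callable[[str, List[str]], List[float]],  # hypothetical simulator call
    top_k: int = 3,
) -> Tuple[str, float]:
    # Step 1: keep the excerpts where the neuron fires most strongly.
    top = sorted(records, key=lambda r: max(r[1]), reverse=True)[:top_k]
    # Step 2: ask the explainer model for a natural-language explanation.
    explanation = explain_fn(top)
    # Step 3: simulate the neuron from the explanation alone, then compare
    # simulated and real activations token by token (all excerpts, for simplicity).
    real, simulated = [], []
    for tokens, acts in records:
        real.extend(acts)
        simulated.extend(simulate_fn(explanation, tokens))
    return explanation, pearson(real, simulated)


# Toy stand-ins for model calls (hypothetical; a real setup would prompt an LLM).
def toy_explain(top_records: List[Record]) -> str:
    return "Marvel superheroes"


def toy_simulate(explanation: str, tokens: List[str]) -> List[float]:
    heroes = {"Thor", "Hulk", "Loki"}
    return [1.0 if t in heroes else 0.0 for t in tokens]


records = [
    (["Thor", "swung", "his", "hammer"], [0.9, 0.0, 0.0, 0.1]),
    (["The", "Hulk", "smashed"], [0.0, 0.8, 0.1]),
    (["I", "ate", "lunch"], [0.0, 0.0, 0.0]),
]
explanation, score = explain_and_score(records, toy_explain, toy_simulate)
print(explanation, round(score, 2))
```

Passing the explainer and simulator in as plain functions keeps the loop independent of any particular model, so either could, in principle, be backed by an LLM other than GPT-4.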

“Using this methodology, we can basically, for every single neuron, come up with some kind of preliminary natural language explanation for what it’s doing and also have a score for how well that explanation matches the actual behavior,” said Jeff Wu, who leads OpenAI’s scalable alignment team. “We’re using GPT-4 to produce explanations of what a neuron is looking for and score how well they match the reality of what it’s doing.”

Using the tool, the researchers generated explanations for all 307,200 neurons in GPT-2 and compiled them in a data set released alongside the tool’s code.
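For a sense of where the 307,200 figure comes from: assuming the neurons belong to the largest GPT-2 variant, GPT-2 XL (48 transformer layers, each with an MLP of 4 × 1,600 = 6,400 neurons), the total is 48 × 6,400 = 307,200. The sketch below also maps a flat index to a (layer, neuron) pair; that indexing scheme is a hypothetical layout for illustration, not the data set’s documented format.

```python
# Assumed GPT-2 XL dimensions (not stated in the article).
N_LAYERS = 48
D_MODEL = 1600
NEURONS_PER_LAYER = 4 * D_MODEL  # GPT-2's MLP hidden width is 4x the model width

TOTAL_NEURONS = N_LAYERS * NEURONS_PER_LAYER


def to_layer_neuron(flat_index: int) -> tuple:
    """Map a flat neuron index to a hypothetical (layer, neuron) pair."""
    if not 0 <= flat_index < TOTAL_NEURONS:
        raise IndexError(flat_index)
    return divmod(flat_index, NEURONS_PER_LAYER)


print(TOTAL_NEURONS)            # 307200
print(to_layer_neuron(6400))    # (1, 0)
print(to_layer_neuron(307199))  # (47, 6399)
```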

The researchers believe the technology could eventually help reduce bias and toxicity in LLMs, but they acknowledge that it has a long way to go before it’s genuinely useful. Of the 307,200 neurons, the tool could confidently explain only about 1,000.
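Deciding which explanations count as “confident” comes down to a cutoff on the explanation score. The sketch below uses made-up scores and a hypothetical threshold of 0.8; the article doesn’t say which cutoff produced the roughly 1,000 confidently explained neurons.

```python
from typing import Dict, List, Tuple

NeuronId = Tuple[int, int]  # hypothetical (layer, neuron) identifier

# Invented explanation scores, for illustration only.
scores: Dict[NeuronId, float] = {
    (0, 12): 0.91,
    (3, 407): 0.35,
    (10, 2048): 0.82,
    (47, 6399): -0.05,
}

CONFIDENT_THRESHOLD = 0.8  # assumed cutoff, not taken from the article


def confident_neurons(scores: Dict[NeuronId, float],
                      threshold: float = CONFIDENT_THRESHOLD) -> List[NeuronId]:
    """Return neuron ids whose explanation score meets the threshold, best first."""
    kept = [(nid, s) for nid, s in scores.items() if s >= threshold]
    return [nid for nid, _ in sorted(kept, key=lambda p: p[1], reverse=True)]


hits = confident_neurons(scores)
print(f"{len(hits)} of {len(scores)} neurons explained confidently: {hits}")
```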

A cynic might argue that, because it requires GPT-4, the tool is essentially an advertisement for GPT-4. Other interpretability tools are less dependent on commercial APIs; DeepMind’s Tracr, for example, is a compiler that translates programs into neural network models.

Wu said that isn’t the case: the tool’s use of GPT-4 is “incidental” and, if anything, highlights GPT-4’s weaknesses in this area. He added that it could also work with LLMs other than GPT-4.

Wu said most explanations score poorly or don’t explain much of the real neuron’s behavior. “A lot of the neurons, for example, activate on five or six different things, but there’s no discernible pattern,” he said. In other cases, he added, a pattern exists but GPT-4 misses it.
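Why does a neuron that responds to several unrelated things score poorly? Here is a toy illustration with invented activations, assuming a correlation-based score. A hypothetical polysemantic neuron fires on “apple,” “1997,” “Paris,” “blue,” and “run,” so a single-concept explanation (“fruit”) barely tracks it, while the same explanation fits a neuron that only fires on fruit.

```python
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either side has no variance."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


tokens = ["apple", "1997", "Paris", "blue", "the", "banana", "run", "of"]

# Invented activations for two hypothetical neurons.
polysemantic = [0.9, 0.8, 0.9, 0.7, 0.0, 0.1, 0.8, 0.0]  # fires on unrelated tokens
fruit_only = [0.9, 0.0, 0.0, 0.0, 0.0, 0.8, 0.0, 0.0]    # fires only on fruit

# Simulated activations for the explanation "fruit" (a toy keyword simulator).
FRUIT = {"apple", "banana"}
simulated = [1.0 if t in FRUIT else 0.0 for t in tokens]

poly_score = pearson(polysemantic, simulated)
mono_score = pearson(fruit_only, simulated)
print(f"polysemantic: {poly_score:.2f}, fruit-only: {mono_score:.2f}")
```

The single explanation accounts for almost none of the polysemantic neuron’s behavior (its score is close to zero), whereas the fruit-only neuron scores close to 1.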

And that’s without considering more advanced and larger models, or models that can browse the web for information. On the web-browsing point, Wu believes browsing wouldn’t change the tool’s underlying mechanisms much. The tool could simply be tweaked, he says, to determine why neurons lead a model to use certain search engines or visit certain websites.

“We hope this will open up a promising avenue to address interpretability in an automated way that others can build on and contribute to,” Wu said. “The hope is that we actually have good explanations of not just what neurons are responding to but overall the behavior of these models—what kinds of circuits they’re computing and how certain neurons affect other neurons.”

Tech Gadget Central Latest Tech News and Reviews