Google is trying to make a splash with Gemini, its flagship suite of generative AI models, apps, and services.

So what is Gemini? How can you use it? And how does it compare to the competition?

To make it easier to keep up with the latest Gemini developments, we have put together this guide, which we will update regularly with new Gemini models, features, and news about Google’s plans for Gemini.

What is Gemini?

Gemini is Google’s state-of-the-art GenAI model family, developed by Google’s AI research labs, DeepMind and Google Research. It comes in three variations:

- Gemini Ultra is the highest-performing model in the Gemini lineup

- Gemini Pro is a lighter, streamlined Gemini model

- Gemini Nano is a compact model designed to run on mobile devices such as the Pixel 8 Pro

All Gemini models were trained to be multimodal, meaning they can work with and analyze more than just text. They were trained on a wide range of audio, images, and videos, as well as a diverse collection of codebases and text in various languages.

That sets Gemini apart from models like Google’s LaMDA, which was trained exclusively on text data. LaMDA cannot understand or generate anything beyond text (essays, email drafts, and so on), but Gemini models can.

What is the difference between the Gemini apps and the Gemini models?

Google, once again demonstrating its branding challenges, did not make clear at the outset that the Gemini models are separate from the Gemini apps on the web and mobile (formerly Bard). The Gemini apps are an interface for accessing certain Gemini models. Think of them as a client for Google’s GenAI.

By the way, the Gemini apps and models are completely separate from Imagen 2, which is Google’s text-to-image model that can be found in certain developer tools and environments.

What are the capabilities of Gemini?

Because the Gemini models are multimodal, they can in theory handle a range of multimodal tasks, from transcribing speech to captioning images and videos to generating artwork. Some of these capabilities have already reached the product stage (more on that later), and Google is promising to deliver all of them, and more, in the near future.

Of course, it is hard to take the company at its word.

Google’s initial Bard launch fell short of expectations. More recently, the company caused controversy with a video purporting to demonstrate Gemini’s capabilities that turned out to be extensively edited and closer to an idealized representation.

Assuming Google is providing accurate information, let’s explore the capabilities of the various tiers of Gemini once they reach their full potential:

Gemini Ultra

Google says that Gemini Ultra can help with tasks such as physics homework, working through problems step by step on a worksheet and pointing out possible errors in answers that have already been filled in.

Gemini Ultra can also identify scientific papers relevant to a specific problem, Google says. It can extract information from those papers and update a chart from one of them by generating the formulas needed to recreate the chart with more recent data.

Gemini Ultra technically supports image generation, as mentioned earlier, but that capability has not yet made it into the productized version of the model. That may be because the mechanism is more complex than the way apps like ChatGPT generate images: rather than feeding prompts to a separate image generator (DALL-E 3, in ChatGPT’s case), Gemini generates images directly, with no intermediary step.

Gemini Ultra is available as an API through Vertex AI, Google’s comprehensive AI developer platform, and through AI Studio, a web-based tool for app and platform developers. It also powers the Gemini apps, but not for free: access to Gemini Ultra through the apps requires a subscription to the Google One AI Premium Plan, which Google prices at $20 per month.

The AI Premium Plan also connects Gemini to your Google Workspace account, giving it access to your emails in Gmail, documents in Docs, spreadsheets in Sheets, and Google Meet recordings. That can come in handy when you need to summarize emails or have Gemini take notes during a video call.

Gemini Pro

According to Google, Gemini Pro surpasses LaMDA in terms of its reasoning, planning, and understanding abilities.

A study by researchers from Carnegie Mellon and BerriAI found that the initial version of Gemini Pro outperformed OpenAI’s GPT-3.5 at handling longer and more complex reasoning chains. But the study also found that this version of Gemini Pro, like other large language models, struggled with math problems involving several digits, and users found instances of flawed reasoning and clear errors.

Google promised fixes, though, and the first arrived in the form of Gemini 1.5 Pro.

Gemini 1.5 Pro is designed as a drop-in replacement for its predecessor and improves on it in several areas, most notably in the amount of data it can process. It can take in approximately 700,000 words or around 30,000 lines of code, whereas Gemini 1.0 Pro could handle only a fraction of that amount.

The model is also not limited to text. Gemini 1.5 Pro can analyze a significant amount of audio or video content, albeit slowly: searching for a specific scene in a one-hour video takes approximately 30 seconds to a minute of processing.

Gemini 1.5 Pro was made available for public preview on Vertex AI in April.

Another endpoint, Gemini Pro Vision, can process text and imagery, including photos and videos, and output text, along the lines of OpenAI’s GPT-4 with Vision model.

Within Vertex AI, developers can customize Gemini Pro for specific contexts and use cases through a fine-tuning or “grounding” process. Gemini Pro can also be connected to external, third-party APIs to carry out specific actions.

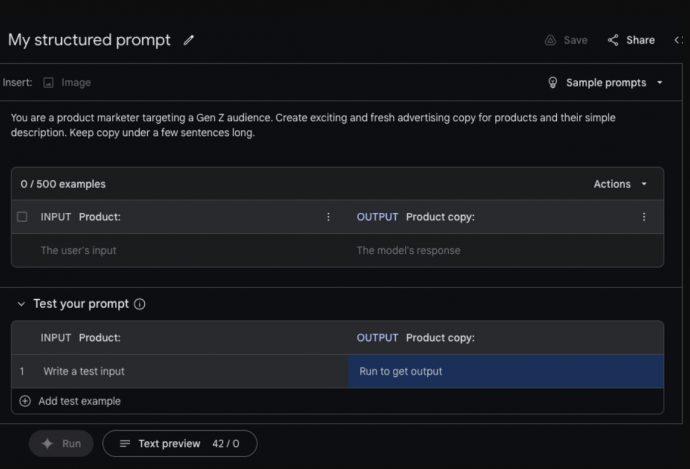

AI Studio offers workflows for building structured chat prompts with Gemini Pro. Developers have access to both the Gemini Pro and Gemini Pro Vision endpoints, and they can adjust the model temperature to control the creative range of the output, provide examples to guide tone and style, and tune the safety settings.
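As a rough illustration of what a temperature setting does, here is a minimal Python sketch of temperature-scaled softmax, the way sampling temperature is commonly applied in language models. It is not Google’s implementation; the function name and the example scores are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # sharply favors the top token
high = softmax_with_temperature(logits, 2.0)  # spreads probability more evenly

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

A low temperature concentrates almost all of the probability on the highest-scoring token, which makes output more predictable. A high temperature flattens the distribution, which makes less likely tokens, and so more varied output, more probable.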

Gemini Nano

Gemini Nano is a much smaller version of the Gemini Pro and Ultra models, efficient enough to run directly on certain mobile devices instead of sending the task to a remote server. So far, it powers several features on the Pixel 8 Pro, Pixel 8, and Samsung Galaxy S24, including Summarize in Recorder and Smart Reply in Gboard.

The Recorder app, which lets users press a button to record and transcribe audio, includes a Gemini-powered summary of recorded conversations, interviews, presentations, and other audio snippets. Users get these summaries even without a signal or Wi-Fi connection, and to protect privacy, no data leaves the phone in the process.

Gemini Nano is also in Gboard, Google’s keyboard app. There, it powers a feature called Smart Reply, which suggests the next thing you might want to say in a conversation in a messaging app. According to Google, the feature is currently limited to WhatsApp but will expand to more apps in the future.

In the Google Messages app on compatible devices, Nano enables Magic Compose, a feature that allows users to create messages in various styles such as “excited,” “formal,” and “lyrical.”

Does Gemini surpass OpenAI’s GPT-4 in terms of performance?

Google has repeatedly touted Gemini’s superiority on benchmarks, claiming that Gemini Ultra exceeds current state-of-the-art results on 30 of the 32 widely used academic benchmarks in large language model research and development. According to the company, Gemini 1.5 Pro is more capable than Gemini Ultra in certain scenarios, such as content summarization, brainstorming, and writing. Presumably that will change with the release of the next Ultra model.

But leaving aside the question of whether benchmarks really indicate a better model, the scores Google highlights appear to be only marginally better than those of OpenAI’s equivalent models. And, as mentioned earlier, early impressions of the older version of Gemini Pro were not favorable, with users and academics noting that it tended to get basic facts wrong, struggled with translations, and gave poor coding suggestions.

What is the price of Gemini?

Gemini 1.5 Pro is currently free to use in the Gemini apps, as well as in AI Studio and Vertex AI.

Once Gemini 1.5 Pro exits preview in Vertex, the model will cost $0.0025 per character for input and $0.00005 per character for output. Vertex customers are billed per 1,000 characters (approximately 140 to 250 words) and, for models such as Gemini Pro Vision, per image ($0.0025).

Assume that a 500-word article contains 2,000 characters. Summarizing that article with Gemini 1.5 Pro would cost $5, while generating an article of similar length would cost $0.10.
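The arithmetic above can be reproduced in a few lines of Python. This is only a sketch built on the per-character rates quoted in this article; the function name is hypothetical, and the rates may not match what Google ultimately charges.

```python
from decimal import Decimal

# Per-character rates as quoted in this article (assumed, not verified).
INPUT_RATE = Decimal("0.0025")    # dollars per input character
OUTPUT_RATE = Decimal("0.00005")  # dollars per output character

def estimate_cost(input_chars=0, output_chars=0):
    """Estimate the dollar cost of a request from its character counts."""
    return input_chars * INPUT_RATE + output_chars * OUTPUT_RATE

# A 500-word article, assumed to be 2,000 characters.
summarize_cost = estimate_cost(input_chars=2000)
generate_cost = estimate_cost(output_chars=2000)

print(f"Summarizing: ${summarize_cost:.2f}")
print(f"Generating:  ${generate_cost:.2f}")
```

Note that the $5 summarization figure counts only the article’s input characters. The summary itself would add a small charge at the output rate.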

The pricing for Ultra has not been announced yet.

Where can you try Gemini?

Gemini Pro

The easiest place to try Gemini Pro is in the Gemini apps. Pro and Ultra can answer queries in a range of languages.

Gemini Pro and Ultra are also accessible in preview in Vertex AI through an API. The API is currently free to use, within limits. It supports certain regions, including Europe, and offers features such as chat functionality and filtering.

Gemini Pro and Ultra can also be found in AI Studio. There, developers can iterate on prompts and Gemini-based chatbots, then obtain API keys to use them in their apps or export the code to a more fully featured IDE.

Code Assist, Google’s suite of AI-powered assistance tools for code completion and generation, now uses Gemini models. Developers can make large-scale changes across codebases, such as updating dependencies across multiple files and reviewing large sections of code.

Google has brought Gemini models to its developer tools for Chrome and to the Firebase mobile development platform, as well as to its database creation and management tools. It has also launched new security products underpinned by Gemini, such as Gemini in Threat Intelligence, a component of Google’s Mandiant cybersecurity platform that can analyze large amounts of potentially malicious code and lets users search for ongoing threats or indicators of compromise using natural language queries.

Gemini Nano

Gemini Nano is available on the Pixel 8 Pro, Pixel 8, and Samsung Galaxy S24, with plans to expand to more devices in the future. Developers who are interested in integrating the model into their Android applications can register for an exclusive preview.

Will Gemini be available on the iPhone?

It might! According to reports, Apple and Google are in talks to bring Gemini to an upcoming iOS update, set to be released later this year. Nothing is settled, though: Apple is also reportedly in discussions with OpenAI and has been working to build out its own GenAI capabilities.

Tech Gadget Central Latest Tech News and Reviews
