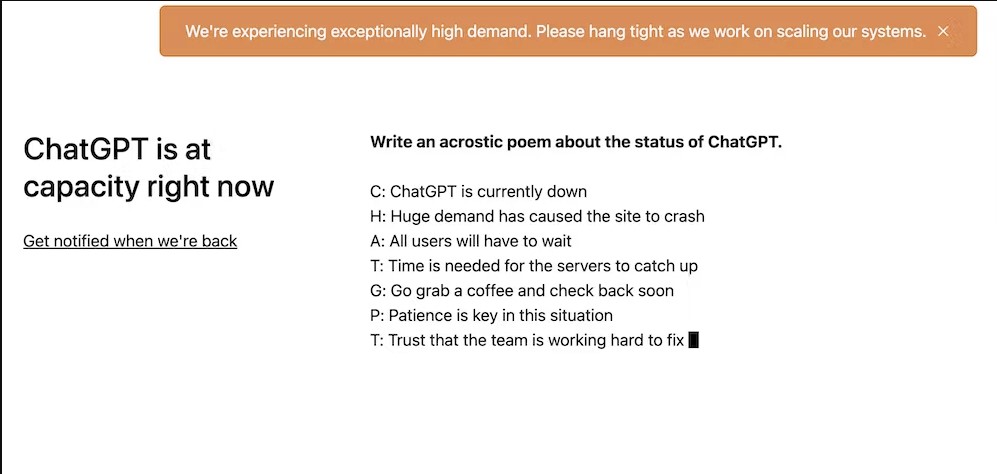

ChatGPT is the latest and most impressive AI chatbot yet. Released two weeks ago, it reached a million users in just five days. It is being used so heavily that its servers have reached capacity several times.

OpenAI, the company that developed it, is already being discussed as a potential rival to Google. Why look something up on a search engine when ChatGPT can write a whole paragraph explaining the answer? (There is even a Chrome extension that lets you do both side by side.)

But what if we never discover the formula that gives ChatGPT its abilities?

The chatbot draws on many technical advances reported in the open scientific literature over the past two decades. But any innovations unique to it are kept secret. OpenAI could well be trying to build a technical and business moat to keep others out.

What it is (and isn’t) capable of

ChatGPT is an excellent tool. Need a haiku about chatbots? Sure.

Chatbots are very smart.

perpetual processing

answers from nowhere.

What about a chatbot joke? No issue.

Why did the chatbot cross the road?

To get to the other side of your screen!

The capabilities of ChatGPT are vast. It can draft rental agreements or business letters, write homework essays, generate computer code according to user specifications, and even pass university exams.

What ChatGPT cannot achieve is equally significant. For instance, it has difficulty telling the difference between truth and lies. It is frequently a convincing liar as well.

What is the fastest marine mammal?

The fastest marine mammal is the sailfish.

The sailfish is not a mammal.

You are correct, the sailfish is not a mammal. I apologize for the mistake. The fastest marine mammal is the peregrine falcon.

ChatGPT works a bit like the autocomplete on your phone. Your phone is trained on a dictionary of words, so it can complete words. Because ChatGPT has been trained on almost the entire internet, it can complete whole sentences, or even whole paragraphs.

However, it only knows what words are most likely to be said next; it has no understanding of what it is saying.
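The autocomplete analogy can be made concrete with a toy sketch. The Python below is a bigram model: it predicts each next word purely from counts of which word followed which in a tiny, made-up training text. This is an illustrative assumption and far simpler than ChatGPT, which uses a large neural network rather than word counts. It still shows how a system can produce plausible continuations while knowing nothing about what the words mean.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    # Ties go to the follower that was seen first in the training text.
    return counts.most_common(1)[0][0]


def autocomplete(follows, start, max_words=5):
    """Greedily extend `start` one most-likely word at a time."""
    out = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Hypothetical toy training text, made up for this example.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))                # → cat
print(autocomplete(model, "the", max_words=3))   # → the cat sat on
```

Nothing in the model checks whether a continuation is true; it only knows what usually comes next, which is the point the paragraph above makes about ChatGPT.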

Open in name only

Until now, advances in AI have been accompanied by peer-reviewed research.

In 2018, for instance, researchers at Google developed the BERT neural network, publishing its methods in peer-reviewed scientific papers and open-sourcing the code. We suspect ChatGPT builds on this network too.

AlphaFold 2, DeepMind's program for predicting how proteins fold, was named Science's Breakthrough of the Year in 2021. The software and its results were released publicly so that researchers around the world could use them to advance biology and medicine.

Following the launch of ChatGPT, all we have is a short blog post describing how it works. There has been no hint of an accompanying scientific paper, or that the code will be open-sourced.

To understand why ChatGPT's workings might be kept secret, you need to know a little about the company behind it.

OpenAI is perhaps one of the strangest companies to emerge from Silicon Valley. It was founded as a non-profit in 2015 to promote and develop "friendly" AI in a way that "benefits humanity as a whole". Elon Musk, Peter Thiel and other leading tech figures pledged $1 billion towards its goals.

Their argument was that we couldn't trust for-profit companies to develop increasingly capable AI that served human prosperity. AI therefore needed to be developed by a non-profit and, as the name suggested, in the open.

In order to scale and compete with the tech giants, OpenAI changed its business model in 2019 to become a capped for-profit firm (with investors limited to a maximum return of 100 times their investment). Microsoft also invested $1 billion in the startup.

It appears that OpenAI’s initial plans for openness were thwarted by money.

Making money from users

Additionally, it appears that OpenAI is leveraging user feedback to screen out the fictitious responses ChatGPT generates.

According to its blog, OpenAI initially used reinforcement learning in ChatGPT to downrank fake and/or problematic answers, relying on a costly, hand-built training set.

But ChatGPT's more than a million users now appear to be helping fine-tune the service. I imagine this kind of human feedback would be prohibitively expensive to obtain any other way.
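The human-feedback step described above can be illustrated with a heavily simplified sketch. OpenAI's blog calls its approach reinforcement learning from human feedback; one part of that approach is training a "reward model" from human comparisons of answers. The Python below shows only that reward-modelling step, under loud assumptions: each answer is reduced to two hypothetical numeric features, the human judgements are made up, and the model is a plain linear scorer fitted with a Bradley-Terry (logistic) pairwise loss. It is not OpenAI's implementation.

```python
import math


def reward(weights, features):
    """Score one answer as a weighted sum of its (hypothetical) features."""
    return sum(w * f for w, f in zip(weights, features))


def train_reward_model(comparisons, n_features, lr=0.5, epochs=200):
    """Fit weights so that preferred answers score higher than rejected ones.

    Bradley-Terry pairwise loss: the probability that the preferred answer
    wins is sigmoid(reward(better) - reward(worse)); we maximise its log.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for better, worse in comparisons:
            margin = reward(weights, better) - reward(weights, worse)
            # d/d(margin) of log(sigmoid(margin)) equals sigmoid(-margin).
            step = 1.0 / (1.0 + math.exp(margin))
            for i in range(n_features):
                weights[i] += lr * step * (better[i] - worse[i])
    return weights


def rank_answers(weights, candidates):
    """Order (text, features) candidates from highest to lowest learned reward."""
    return sorted(candidates, key=lambda c: reward(weights, c[1]), reverse=True)


# Hypothetical features per answer: [contains a factual error, admits uncertainty].
candidates = [
    ("confident but wrong", [1.0, 0.0]),
    ("hedged and correct", [0.0, 1.0]),
    ("confident and correct", [0.0, 0.0]),
]
# Made-up human judgements, each given as (preferred features, rejected features).
comparisons = [
    ([0.0, 0.0], [1.0, 0.0]),
    ([0.0, 1.0], [1.0, 0.0]),
]
weights = train_reward_model(comparisons, n_features=2)
for text, _ in rank_answers(weights, candidates):
    print(text)
```

Because every made-up judgement rejects the answer with a factual error, that feature ends up with a negative weight and the wrong answer is ranked last. In full reinforcement learning from human feedback, a learned reward like this is then used to adjust the chatbot itself; that step is omitted here.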

We now face the prospect of a major advance in AI built on methods not described in the scientific literature, with datasets restricted to a company that appears to be open in name only.

As an AI researcher, I find the glorification of closed-source, proprietary models problematic. We should be emphasizing sharing and open-sourcing AI models, datasets and code, be it in conferences or in the press (4/4).

— Dr. Sasha Luccioni 💻🌎✨🤗 (@SashaMTL) December 9, 2022

Where next?

AI has advanced rapidly over the past decade, in large part thanks to openness in both academia and industry. Most of the AI tools available to us are open-sourced.

But in the race to build more powerful AI, that may be coming to an end. If openness in AI declines, progress in the field may slow. New monopolies could also emerge.

And if history is any guide, a lack of transparency breeds bad behavior in tech spaces. So before we praise (or criticize) ChatGPT, we should consider how it came to be in our hands.

If we’re not careful, the exact thing that seems to herald the dawn of AI’s golden age can also herald its demise.

The Conversation

Toby Walsh, Professor of AI at UNSW, Research Group Leader, UNSW Sydney

Tech Gadget Central Latest Tech News and Reviews