Alongside other A.I.-focused announcements, Google today said that its recently launched “multisearch” feature is now available to users worldwide on mobile devices, anywhere that Google Lens is already available. The search feature, which lets users search with text and images at the same time, was first unveiled in April of last year as a way to modernize Google Search and take better advantage of smartphones’ capabilities. Over the coming months, “multisearch near me,” a variation that focuses searches on nearby businesses, will also roll out globally, as will multisearch for the web and a new Lens feature for Android users.

As Google has previously described, multisearch is powered by an A.I. technology known as Multitask Unified Model, or MUM, which can understand information in a variety of formats, including text, photos, and videos. MUM can then draw insights and connections between topics, concepts, and ideas. Google put MUM to work in its Google Lens visual search features so that users could add text to a visual search query.

“By introducing Lens, we redefined what it means to search. Since then, we’ve added Lens directly to the search bar, and we’re still adding new features like shopping and step-by-step homework assistance,” said Prabhakar Raghavan, Google’s SVP in charge of Search, Assistant, Geo, Ads, Commerce, and Payments products, speaking at a press conference in Paris.

For instance, a user could find a picture of a shirt they like in Google Search, then ask Lens where they can find the same pattern on a different type of clothing, like a skirt or socks. Or they could point their phone at a damaged part of their bike and type “how to fix” into Google Search. This combination of words and images may make it easier for Google to process and understand search queries that it couldn’t previously handle, or that would have been harder to enter using text alone.

The method is most useful when shopping, for example when you find clothing you like but want it in a different color or style. A photo of a piece of furniture, such as a dining set, could also be used to find coordinating items, such as a coffee table. According to Google, users could also sort and refine their multisearch results by brand, color, and visual attributes.

The feature was made available to users in the United States last October and was extended to users in India in December. According to Google, multisearch is now available to all mobile users worldwide, in every language and country where Lens is available.

According to Google, the “multisearch near me” variant will also expand soon.

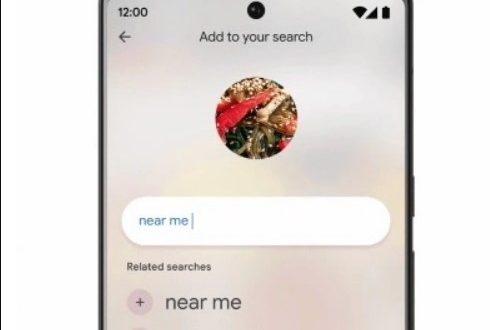

In May of last year, Google announced that it could route multisearch queries to nearby businesses (also known as “multisearch near me”), returning results for the items users were looking for that matched inventory at local stores or other businesses. In the case of the bike with the broken part, for instance, you could add “near me” to a query with a picture to locate a nearby bike shop or hardware store that had the replacement part you needed.

Google said this feature will roll out over the coming months to all languages and countries where Lens is available. In the same period, multisearch will also extend beyond mobile devices with support on the web.

In terms of brand-new search products, the search giant teased an upcoming Google Lens feature, saying that Android users would soon be able to search what they see in pictures and videos across apps and websites on their phone, without leaving the app or website they are in. Google calls this “search your screen,” and it will be available wherever Lens is offered.

Google also announced a new Google Lens milestone, noting that the tool is now used more than 10 billion times monthly.

Tech Gadget Central Latest Tech News and Reviews
