After a 30-minute demo of the major features that are ready to test, I was convinced that Apple has delivered a true leapfrog in capability and execution of XR—or mixed reality—with its new Apple Vision Pro.

To clarify, I’m not saying it delivers on all its promises, is a truly new paradigm in computing, or lives up to any other high-powered claim Apple hopes to deliver on once it ships. I will need far more time with the device than a guided demo allows.

Since 2013’s Oculus DK1, I’ve used almost every major VR headset and AR device, including the latest Quest and Vive headsets. I’ve tried every attempt to make XR happen. I’ve been awed and re-awed as the developers of those devices’ hardware, software, and marquee apps have tried to solve the “conundrum of the killer app” and find a hit with the public.

Gorilla Tag, VRChat, and Cosmonious High are genuine social, narrative, and gaming successes. Sundance filmmakers’ first-person accounts of human (or animal) suffering have moved me.

None had Apple Vision Pro’s advantages, namely 5,000 patents filed in recent years and a massive pool of talent and capital. Apple-level ambition shows in every part of it. It may or may not be the “next computing mode,” but you can see the conviction behind each choice. No shortcuts. Full engineering.

With 24 million pixels across the two panels, the hardware is great, orders of magnitude better than most headsets. The optics are better, the headband is comfortable and easily adjustable, and the top strap relieves weight. Apple is still deciding which light seal (cloth shroud) to ship with it, but I liked the default one. The seals will come in different sizes and shapes to fit different faces. The power connector, which uses internal pin-type linkages with an external twist lock, is also great.

Some optical adjustments for vision differences can be made magnetically. Eye-relief calibration is automatic during onboarding. No manual wheels.

Though large, the main frame and glass look good. Light but present.

If you’ve tried VR, you know that latency-induced nausea and isolation from wearing goggles are the biggest barriers.

Apple addressed both. The R1 chip, which sits next to the M2 chip, has a system-wide polling rate of 12ms, and I noticed no judder or frame drops. The passthrough mode had some motion blur, but it wasn’t distracting. Windows rendered sharply and moved quickly.

Apple’s new hardware solved those problems. New ideas, technologies, and implementations are everywhere. $3,500 puts the device in the power user category for early adopters.

Near-perfect gesture and eye tracking.

The headset detects hand gestures almost anywhere around it, including with your hands in your lap or resting on a chair or couch. Many hand-tracking interfaces require you to hold your hands in front of you, which is tiring. Cameras on the bottom of Apple’s headset track your hands instead. A calibrated eye-tracking array highlights nearly everything you look at, and a simple tap selects it.

Passthrough matters.

Long-term VR or AR wear requires a real-time 4k view of the world, including any people in your personal space. Most people feel uncomfortable if they can’t see their surroundings for a while. Passing through an image of the world should reduce that worry and increase use time. A clever “breakthrough” mechanism automatically passes a person near you through your content, alerting you to their approach. The outward-facing eyes, which change appearance depending on what you’re doing, give context to people around you.

Resolution makes text readable.

Apple’s marketing of this as a full-fledged computer only makes sense if you can actually read text on it. All previous “virtual desktop” setups used panels and lenses too blurry to read fine text, and trying often hurt. With the Apple Vision Pro, text is crisp and legible at all sizes and “distances.”

My brief headset experience also included a few surprises. The samples were meticulously detailed, from the sharp display to the responsive interface.

Personas work.

I doubted Apple could create a workable digital avatar from a Vision Pro headset scan of your face. That doubt was crushed. The digital version of you that it creates, to serve as your avatar in FaceTime calls and other areas, has its toes firmly planted on the far side of the uncanny valley. It’s not perfect, but Apple got skin tension and muscle movement right, and it uses machine learning models to interpolate a full range of facial contortions from your expressions. The brief interactions I had with a live person on a call (I checked by asking off-script questions) did not feel creepy or odd. It worked.

It’s crisp.

I know I’m repeating myself, but it’s crisp. In demos like the 3D dinosaur, you could see detail right down to the texture level.

3D films are good.

Jim Cameron must have been stunned when he saw Avatar: The Way of Water on the Apple Vision Pro. This device was born to make the 3D format sing, and it can play 3D films almost immediately, so a decent library of shot-in-3D movies should get new life. The 3D photos and videos captured directly with Apple Vision Pro also look great, but I haven’t been able to capture any myself yet. Would it feel awkward? Not sure.

Easy installation.

You’re ready in minutes. Very Apple.

It looks great.

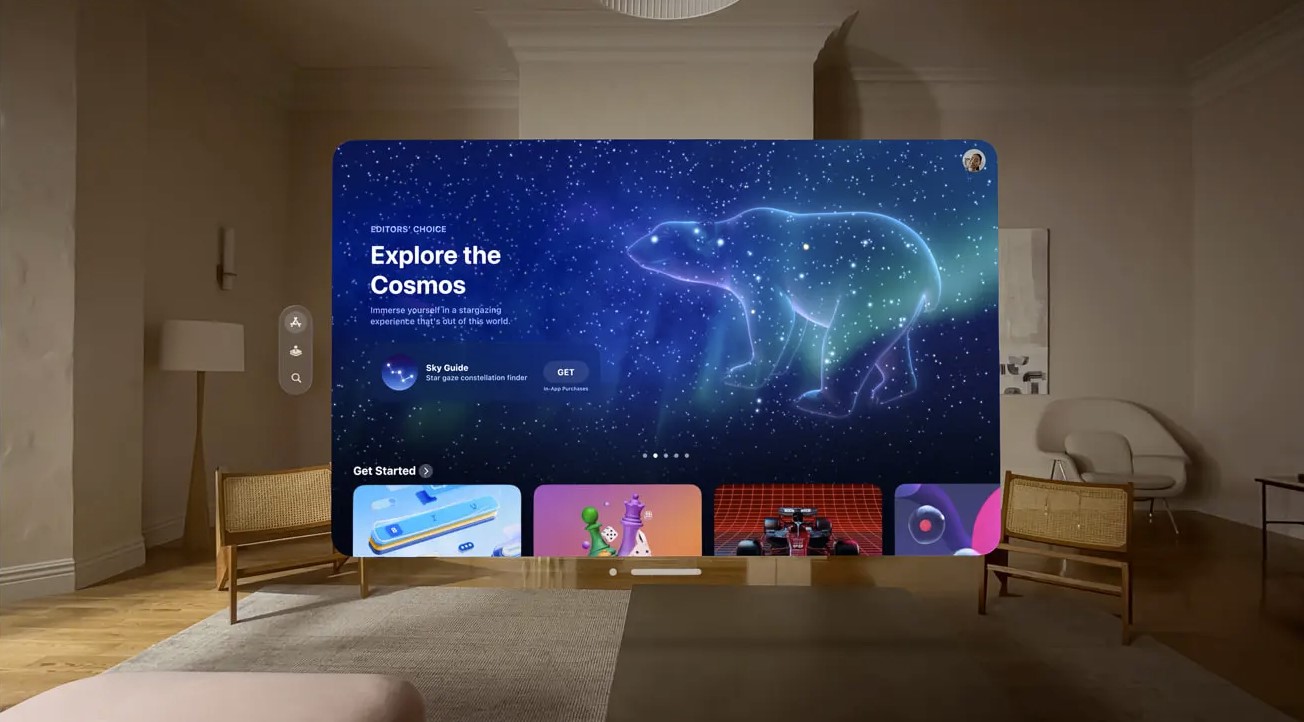

The interface and apps are so good that Apple used them directly from the device in its keynote. The interface is bright and bold, and it feels present because of the way it interacts with other windows, casts shadows on the ground, and reacts to lighting.

I’m not sure Apple Vision Pro will fulfill Apple’s spatial computing claims. I haven’t had enough time with it, and Apple is still working on the light shroud and many software features.

It’s excellent, though.

It comes close to the ideal of an XR headset. Now we’ll watch what developers and Apple do with it, and how the public reacts, over the next few months.

Tech Gadget Central Latest Tech News and Reviews
