Hollywood may be embroiled in labor disputes over AI, but the technology has long been part of film and TV. Algorithmic and generative tools featured in many talks and announcements at SIGGRAPH in Los Angeles. GPT-4 and Stable Diffusion may not have found their place yet, but the creative side of production is ready to embrace them if they can enhance artists rather than replace them.

SIGGRAPH has been a conference about computer graphics and visual effects for 50 years, and over that time its topics have overlapped more and more with AI.

At afterparties and networking events this year, the strike was the first topic of conversation, yet few presentations or talks addressed it. SIGGRAPH is a conference for technical and creative minds, and the vibe I got was: “it sucks, but in the meantime we can keep improving our craft.”

Fears about AI in production are not illusory, but they are misleading. Generative AI, such as image and text models, has improved, raising concerns that it will replace writers and artists, and some studio executives hold unrealistic hopes of using AI to partially replace writers and actors. But AI has been performing important, artist-driven tasks in film and TV for a long time.

Numerous panels, technical paper presentations, and interviews made this clear. A full history of AI in VFX would be interesting, but here are some of the ways it is being used in cutting-edge effects and production work.

Pixar artists use ML and simulations

An early example came from a pair of Pixar presentations about the animation techniques behind Elemental. The characters in this film are more abstract than usual, and making a person out of fire, water, or air is difficult. Imagine shaping the fractal complexity of these substances into a body that acts and emotes clearly while still looking “real.”

The animators and effects coordinators explained that the flames, waves, and vapors of dozens of characters were simulated and parameterized using procedural generation. Hand-sculpting and animating every flame or wisp of cloud wafting off a character would have been far too laborious and technical.

The presentations showed that while they used sims and sophisticated material shaders to create the desired effects, the artistic team and process were deeply intertwined with engineering. They also worked with ETH Zurich researchers.

One example was Ember, the film’s fiery main character. It wasn’t enough to simulate flames, change their colors, or adjust dials until the result looked right. The flames had to look the way the artist wanted, not just the way flames look in real life. To achieve this, the team used “volumetric neural style transfer” (NST), a volumetric take on the machine learning technique most people have seen used to render a selfie in the style of Edvard Munch.

In this case, the team passed the raw voxels of the “pyro simulation” (the generated flames) through a style transfer network trained on an artist’s rendering of a stylized, less physically realistic character flame. The resulting voxels keep the natural, unpredictable look of a simulation while taking on the style the artist intended.
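Neural style transfer in the well-known Gatys et al. formulation compares “style” through Gram matrices: averaged products of feature channels, which record which features occur together but not where. The sketch below is a toy illustration of that idea on a voxel grid, not Pixar’s volumetric NST. It assumes each voxel already carries a short feature vector (a real system would extract features with a trained network), optimizes those raw values directly by gradient descent, and uses hypothetical names (`gram_matrix`, `style_loss`, `stylize`) and made-up data.

```python
"""Toy volumetric style transfer: pull the feature statistics of a voxel grid
toward those of an artist's stylized reference (Gram-matrix NST idea)."""

# A voxel grid is flattened to a list of voxels; each voxel holds C feature
# values. HYPOTHETICAL simplification: real volumetric NST would extract these
# features with a trained network; here we optimize raw per-voxel values.
Voxels = list[list[float]]


def gram_matrix(features: Voxels) -> list[list[float]]:
    """C x C feature co-activation matrix, averaged over all voxels.

    Voxel positions are summed out, so the matrix captures which features
    occur together (the "style"), not where they occur.
    """
    n, c = len(features), len(features[0])
    return [[sum(v[i] * v[j] for v in features) / n for j in range(c)]
            for i in range(c)]


def style_loss(features: Voxels, style: Voxels) -> float:
    """Mean squared difference between the two Gram matrices."""
    g, s = gram_matrix(features), gram_matrix(style)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2
               for i in range(c) for j in range(c)) / (c * c)


def stylize(sim: Voxels, style: Voxels, style_weight: float = 1.0,
            content_weight: float = 0.1, lr: float = 0.2,
            steps: int = 500) -> Voxels:
    """Gradient descent on style_weight * style_loss + content_weight * content_loss.

    content_loss is the mean squared distance to the original simulation, so
    the result keeps the simulation's structure while its statistics move
    toward the artist's reference.
    """
    f = [list(v) for v in sim]  # copy, so the input simulation is untouched
    n, c = len(f), len(f[0])
    s = gram_matrix(style)
    for _ in range(steps):
        g = gram_matrix(f)
        d = [[g[i][j] - s[i][j] for j in range(c)] for i in range(c)]
        for v in range(n):
            # d(style_loss)/dF[v][k]   = 4 / (c^2 n) * sum_j F[v][j] * D[j][k]
            # d(content_loss)/dF[v][k] = 2 / (n c) * (F[v][k] - sim[v][k])
            grad = [style_weight * 4.0 / (c * c * n)
                    * sum(f[v][j] * d[j][k] for j in range(c))
                    + content_weight * 2.0 / (n * c) * (f[v][k] - sim[v][k])
                    for k in range(c)]
            f[v] = [x - lr * gk for x, gk in zip(f[v], grad)]
    return f


if __name__ == "__main__":
    # Hypothetical 4-voxel "pyro sim" (feature 0 dominant) and stylized reference.
    sim = [[1.0, 0.0], [0.8, 0.1], [0.9, 0.2], [1.0, 0.1]]
    style = [[0.5, 0.5], [0.6, 0.4], [0.4, 0.6], [0.5, 0.5]]
    out = stylize(sim, style)
    print("style loss before:", style_loss(sim, style))
    print("style loss after: ", style_loss(out, style))
```

In this toy setup, the content weight acts as the artist-facing dial: raising it keeps more of the simulation’s natural structure, while lowering it pushes harder toward the reference look.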

The animators are sensitive to the suggestion that they made the film using AI, which is false.

“If anyone ever tells you that Pixar used AI to make Elemental, that’s wrong,” Pixar’s Paul Kanyuk said during the presentation. “We shaped her silhouette edges with volumetric NST.”

(NST is a machine learning technique, but Kanyuk was making the point that it was used as a tool to achieve an artistic result, not “made with AI.”)

Later, other members of the animation and design teams explained how they used procedural, generative, and style transfer tools to recolor a landscape to match an artist’s palette or mood board, or to fill city blocks with unique buildings mutated from hand-drawn “hero” ones. The common thread was that AI and AI-adjacent tools helped artists speed up tedious manual processes and better match the desired look.
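The presentations, as described here, don’t say how new buildings were derived from the hero designs. One common procedural approach, sketched below purely as an illustration, treats each hero building as a small set of parameters and jitters them within artist-set bounds using a seeded random generator. The `Building` fields, the `mutate` and `fill_block` helpers, and the ±20% jitter are all hypothetical.

```python
import random
from dataclasses import dataclass, replace


# HYPOTHETICAL parameterization of a hand-designed "hero" building; a real
# pipeline would carry far richer geometry.
@dataclass(frozen=True)
class Building:
    floors: int
    width_m: float
    window_spacing_m: float
    roof: str


ROOF_STYLES = ("flat", "gabled", "dome")


def mutate(hero: Building, rng: random.Random, jitter: float = 0.2,
           roof_swap_chance: float = 0.3) -> Building:
    """Return a variant of a hero building.

    Numeric parameters are scaled by a random factor in [1 - jitter, 1 + jitter]
    and the roof style is occasionally swapped, so every variant stays close to
    the artist's original design.
    """
    def scale() -> float:
        return 1.0 + rng.uniform(-jitter, jitter)

    return replace(
        hero,
        floors=max(1, round(hero.floors * scale())),
        width_m=hero.width_m * scale(),
        window_spacing_m=hero.window_spacing_m * scale(),
        roof=rng.choice(ROOF_STYLES) if rng.random() < roof_swap_chance else hero.roof,
    )


def fill_block(heroes: list[Building], count: int, seed: int = 0) -> list[Building]:
    """Fill a city block with `count` variants of randomly chosen hero buildings.

    A fixed seed makes the block reproducible, so the same street
    regenerates identically between shots.
    """
    rng = random.Random(seed)
    return [mutate(rng.choice(heroes), rng) for _ in range(count)]


if __name__ == "__main__":
    heroes = [Building(10, 20.0, 2.5, "flat"), Building(4, 12.0, 3.0, "gabled")]
    for b in fill_block(heroes, count=5, seed=42):
        print(b)
```

Seeding the generator is the key design choice in this sketch: the variety comes from randomness, but the same seed always rebuilds the same block, so a street does not reshuffle between shots.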

Accelerating AI dialogue

Martine Bertrand, a senior AI researcher at DNEG, which animated the stunning Nimona, made a similar point. In many effects and production pipelines, he said, look development and environment design are labor-intensive. DNEG’s presentation “Where Proceduralism Meets Performance” covered these topics.

“People don’t realize that there’s an enormous amount of time wasted in the creation process,” Bertrand said. Finding the right look for a shot with a director can take weeks, and poor communication often means much of that work is thrown away. That is frustrating, he added, and AI can speed up exploratory, open-ended processes like this one.

Using AI to multiply artists’ efforts “enables dialogue between creators and directors,” he said. An alien jungle, but like this? Or like this? A mysterious cave like this? Or like this? Fast feedback is crucial for a creator-led, visually complex story like Nimona, where spending a week rendering a look only to have the director reject it a week later is a serious production delay.

Sokrispy CEO Sam Wickert explained in one talk that pre-visualization is reaching new levels of collaboration and interactivity. His company pre-visualized the outbreak scene in HBO’s “The Last of Us,” a complex “oner” (a single continuous shot) set in a car, with many extras, camera movements, and effects.

AI played a limited role in that more grounded scene, but improved voice synthesis, procedural environment generation, and other tools are contributing to an increasingly tech-forward previs process.

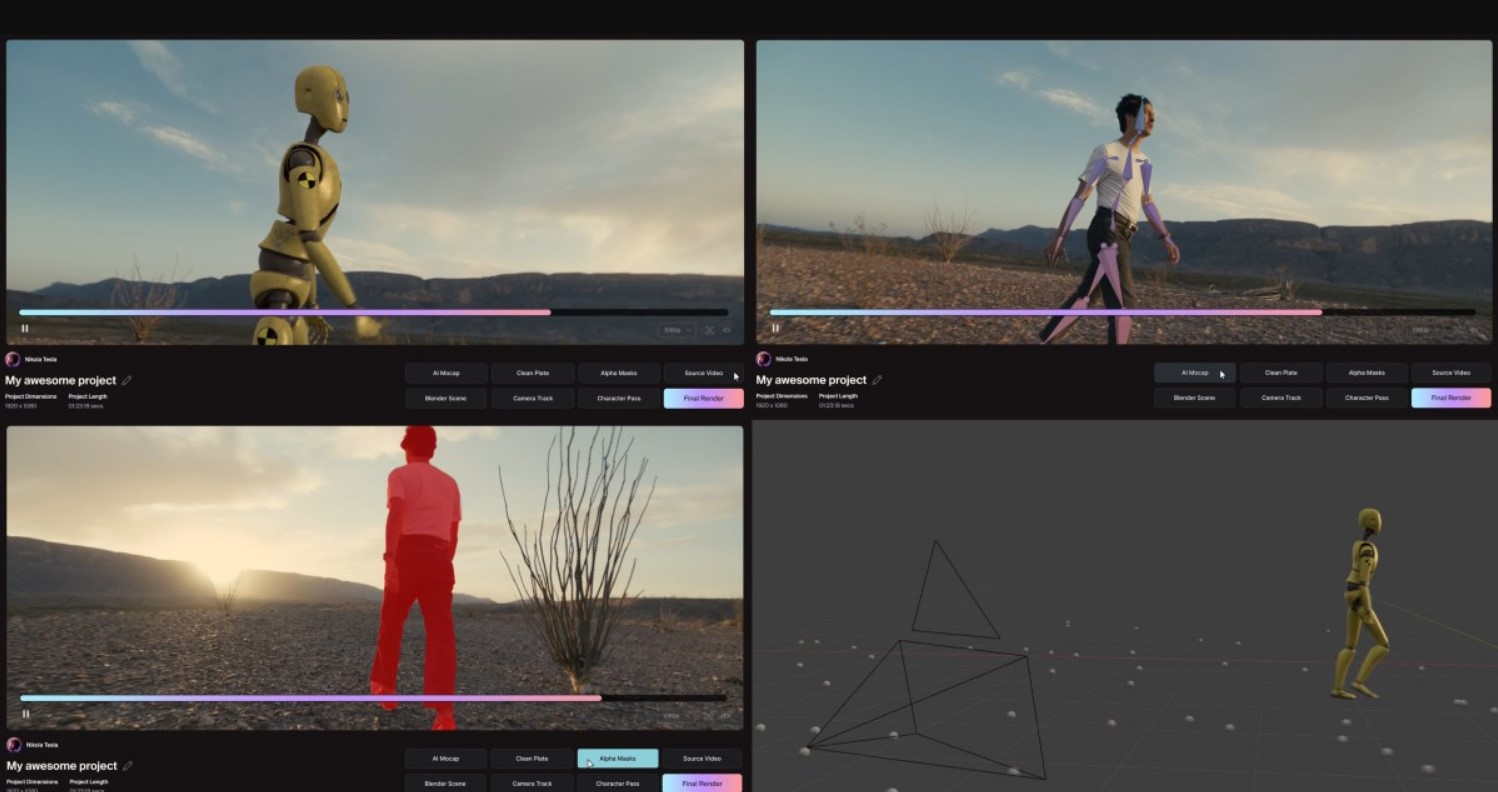

Wonder Dynamics, mentioned in several keynotes and presentations, is another company building machine learning production tools that keep artists in control. Its scene and object recognition models parse ordinary footage and replace human actors with 3D models in seconds, rather than weeks or months.

A few months ago, its founders told me that their tools automate grueling rote (sometimes literally roto) labor that involves few creative decisions. “This doesn’t disrupt what they’re doing; it automates 80-90% of the objective VFX work and leaves them with the subjective work,” co-founder Nikola Todorovic said. I met him and his co-founder, actor Tye Sheridan, at SIGGRAPH, and they were enjoying being the talk of the town. It was clear that the industry is moving in the direction they set out in years ago. Sheridan will be on the AI stage at Disrupt in September.

That said, the VFX community is not dismissing the warnings of striking writers and actors. Its members echo those warnings and share the existential concerns, albeit to a lesser degree. An actor’s likeness or performance (or a writer’s imagination and voice) is their livelihood, and the threat of it being appropriated and automated is terrifying.

Automation threatens artists elsewhere in the production process too, but that is more a people problem than a technology one. Many people I spoke to agreed that bad decisions by uninformed leaders are the real problem.

“AI looks so smart that you may defer your decision-making process to the machine,” Bertrand said. It gets scary when humans delegate their responsibilities to machines.

AI could transform the creative process by reducing repetitive tasks or by letting creators with smaller teams or budgets compete with larger ones. But if AI is allowed to take over the creative process itself, as some executives seem willing to do, the current strikes may be only the beginning.

Tech Gadget Central Latest Tech News and Reviews
