Meta today introduced new messaging restrictions for teenagers on Facebook and Instagram that, by default, prevent anyone they are not connected to from messaging them.

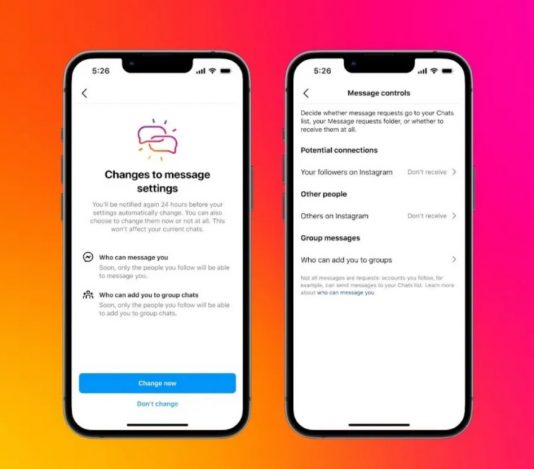

Until now, Instagram has barred adults over 18 from messaging teenagers who do not follow them. The new limits will apply by default to all users under 16 and, in certain regions, under 18. Meta said it will notify existing users.

On Messenger, teens covered by the new limits will only receive messages from people who are on their Facebook friends list or in their contacts.

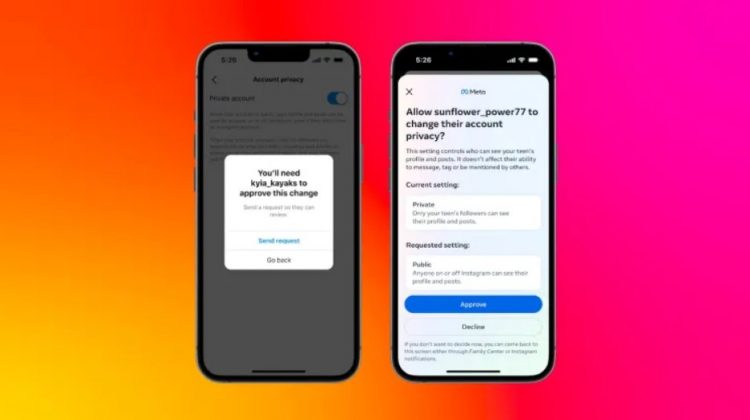

Meta is also strengthening its parental controls by letting guardians approve or reject teenagers' changes to default privacy settings. Previously, parents received a notification when a teenager changed these settings, but they could not act on it.

As an example, the company said guardians can block a teen's attempt to switch their account from private to public, modify the Sensitive Content Control settings, or change their DM controls.

In 2022, Meta introduced parental supervision tools for Instagram, providing guardians with insights into their teens’ usage.

The social media giant also announced plans for a new feature that will protect teenagers from seeing unwanted and inappropriate images in direct messages from people they are already connected to. The company said the feature will also work in end-to-end encrypted chats and is meant to discourage teenagers from sending such images.

Meta did not say how it will protect teenagers' privacy while running these features. It also did not specify what it considers “inappropriate.”

In a recent update, Meta introduced new features aimed at preventing teenagers from accessing content related to self-harm or eating disorders on Facebook and Instagram.

EU regulators contacted Meta in the past month and asked for more details about the company’s efforts to prevent the sharing of self-generated child sexual abuse material (SG-CSAM).

Meanwhile, the company faces a civil lawsuit in New Mexico state court, which claims that Meta’s social network encourages sexual content among teenage users and facilitates the creation of accounts for predators. Separately, more than 40 US states sued the company in October in a federal court in California, alleging that its product design harmed kids’ mental health.

The company is scheduled to appear before the Senate on January 31 this year, alongside other social networks such as TikTok, Snap, Discord, and X (formerly Twitter), to address concerns regarding child safety.

Tech Gadget Central Latest Tech News and Reviews
