All users under the age of 18 will have the nudity protections turned on by default, and older users will be encouraged to turn them on as well.

Instagram is about to release a new safety feature that blurs nude images in messages. The feature is meant to help protect minors on the app from abuse and sexual exploitation scams.

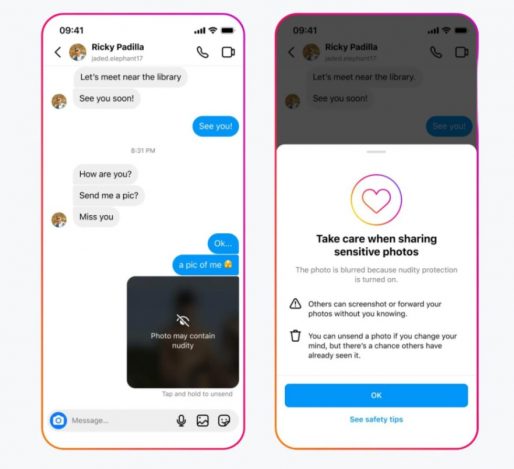

Meta announced the new feature on Thursday. It blurs photos that appear to contain nudity and discourages users from sending them. The feature will be on by default for teenage Instagram users, whom Meta identifies from the birthday information on their accounts. Adult users will see a message encouraging them to turn it on.

Critics have long said that social media sites like Facebook and Instagram are bad for kids because they hurt their mental health and body image, give abusive parents a platform, and turn into a “marketplace for predators in search of children.”

According to The Wall Street Journal, testing of the new feature will begin in the next few weeks, followed by a global rollout over the next few months. Meta says that the feature uses on-device machine learning to check whether an image sent through Instagram’s direct messaging service contains nudity. The company will not have access to these images unless they have been reported.

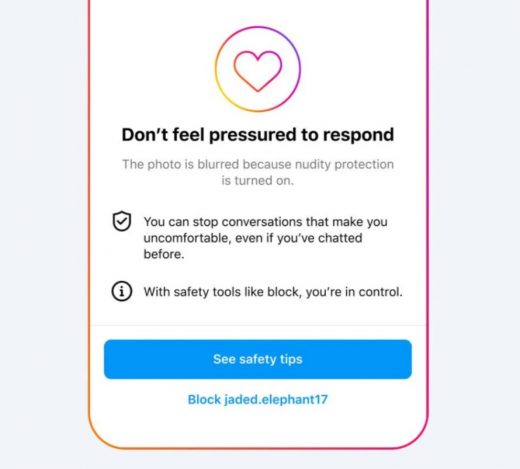

When the protection is turned on, Instagram users who receive nude photos will see a message telling them not to feel pressured to respond. They will also be given the option to block and report the sender. In its announcement, Meta said, “This feature is meant to protect people not only from seeing unwanted nudity in their DMs, but also from scammers who may send nude images to get people to send their own images back.”

Users who try to send a nude photo by direct message (DM) will see a message warning them about the risks of sharing private photos. Users who try to forward a nude image they have received will also see a warning.
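The behavior described above can be pictured as a small decision flow. The following is only an illustrative sketch and not Meta's code: every name in it (`protection_default`, `decide_display`, the `looks_nude` classifier callback) is hypothetical, and it assumes that the default depends only on whether the user is under 18 and that the on-device check returns a simple yes or no.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable

ADULT_AGE = 18  # the article states the default applies to users under 18


def age_on(birthday: date, today: date) -> int:
    """Whole years between birthday and today."""
    years = today.year - birthday.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birthday.month, birthday.day):
        years -= 1
    return years


def protection_default(birthday: date, today: date) -> bool:
    """Hypothetical rule: protection starts on for users under 18."""
    return age_on(birthday, today) < ADULT_AGE


@dataclass
class DisplayDecision:
    blurred: bool
    show_safety_notice: bool      # "don't feel pressured to respond"
    offer_block_and_report: bool


def decide_display(
    image: bytes,
    protection_on: bool,
    looks_nude: Callable[[bytes], bool],  # stand-in for the on-device model
) -> DisplayDecision:
    """Decide how a received DM image is shown, entirely on the device."""
    # The classifier only runs when the protection is enabled.
    flagged = protection_on and looks_nude(image)
    return DisplayDecision(
        blurred=flagged,
        show_safety_notice=flagged,
        offer_block_and_report=flagged,
    )
```

In this sketch the image never leaves the `decide_display` call, which mirrors Meta's statement that the check happens on the device; how the real classifier and settings are wired together has not been made public.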

Meta backed a tool in February 2023 for taking sexually explicit images of minors offline, and earlier this year it limited kids’ access to harmful topics like suicide, self-harm, and eating disorders. The new feature is the company’s latest attempt to make its platforms safer for kids.

Tech Gadget Central Latest Tech News and Reviews
