Meta announced today that it is helping to fund a new initiative called Take It Down. Working with the National Center for Missing and Exploited Children (NCMEC), Take It Down will help people under the age of 18 stop their intimate photos from spreading online. The online tool works like an earlier Facebook effort to stop the spread of non-consensual intimate photos, sometimes called “revenge porn.”

Meta also announced new tools to make it harder for “suspect adults” to communicate with teens on Instagram.

The firm believes its new takedown procedure for non-consensual intimate pictures protects user privacy by not requiring young people to submit the images to Meta or another entity. Instead, the system will assign a unique hash value—a numerical code—to the image or video from the user’s device. This hash will be uploaded to NCMEC, allowing any company to find copies of those photographs and automatically take them down and prevent them from being shared in the future.
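As a rough illustration of the local-hashing idea described above, the Python sketch below computes a digest of a file on the user's own device, so that only the digest, and never the image itself, would need to be submitted. The choice of SHA-256 is an assumption made for this example; Meta's announcement does not say here which hash function Take It Down uses. The function name `hash_file_locally` and the throwaway demo file are hypothetical.

```python
import hashlib
import os
import tempfile


def hash_file_locally(path: str, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks.

    Hypothetical helper: SHA-256 is an assumption for illustration,
    not necessarily the hash function Take It Down uses.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large videos do not need to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Demo: a throwaway file stands in for an image on the user's device.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"stand-in image bytes")
    demo_path = tmp.name

fingerprint = hash_file_locally(demo_path)
os.remove(demo_path)

# Only this short string would be shared, not the file contents.
print(fingerprint)
```

Note that a cryptographic hash like this one only matches byte-for-byte identical copies; a resized or re-encoded copy of the same picture would produce a different digest.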

Facebook’s earlier “revenge porn” system was criticized by security experts because it required users to upload their images before the hash could be made. It now makes hashes locally, and the help materials say that “your photos will never leave your computer.” The new Take It Down system appears to use the same method.

Meta’s Global Head of Safety, Antigone Davis, says in a statement that having a personal, intimate image shared with others can be frightening and overwhelming, especially for young people, and that sextortion can make it worse.

Meta says that the system can also be used by adults, such as a young person’s parents or guardians, or by adults who are worried about photos that were taken of them without their consent when they were younger.

The Take It Down website has links to other NCMEC resources, such as tools to search for your explicit images online, a CyberTipline to report online exploitation, and more.

Participating platforms include Meta’s Facebook and Instagram, as well as Yubo, OnlyFans, and Pornhub (MindGeek). Twitter and Snapchat are missing.

NCMEC says the system launched in December 2022, before this public announcement, and has received more than 200 cases since. To reach kids, a new PSA from VCCP will air on the platforms they use.

People who are aware of or in possession of non-consensual photographs could use the system, provided they know the takedown option exists. Companies are already required to report child sexual abuse material (CSAM) to NCMEC, but they have been left to build their own systems for detecting it. Because federal law does not require platforms to search for this imagery, CSAM spreads across them. Facebook, with 2.96 billion monthly users, is a big part of this problem. EARN IT and other bills meant to close this loophole have failed. Critics said that such legislation could harm freedom of speech and consumer privacy.

Yet the lack of legislation has forced platforms like Meta to self-regulate how they handle this and other content. With Congress failing to enact new internet-era regulations, legal questions about big tech’s responsibility for platform content have reached the Supreme Court. The justices are considering Section 230 of the Communications Decency Act, which was written in the early days of the internet to protect websites from being held responsible for what their users post. Cases involving Twitter and YouTube, which turn in part on how recommendation systems work, will determine whether these decades-old protections should be rolled back or thrown out. Though unrelated to CSAM, the cases demonstrate the flaws in the U.S. system of platform regulation.

Without legal guidance, Meta has been setting its own policies on algorithm choice, design, recommendation technology, and end-user protections.

In anticipation of coming regulations, Meta has started making new teen accounts private by default and applying its strictest settings, and it has been rolling out safety features and parental controls. These updates restricted adult users from contacting teens they didn’t know and warned teens about adults engaged in suspicious behavior, such as sending a large number of friend requests to teens.

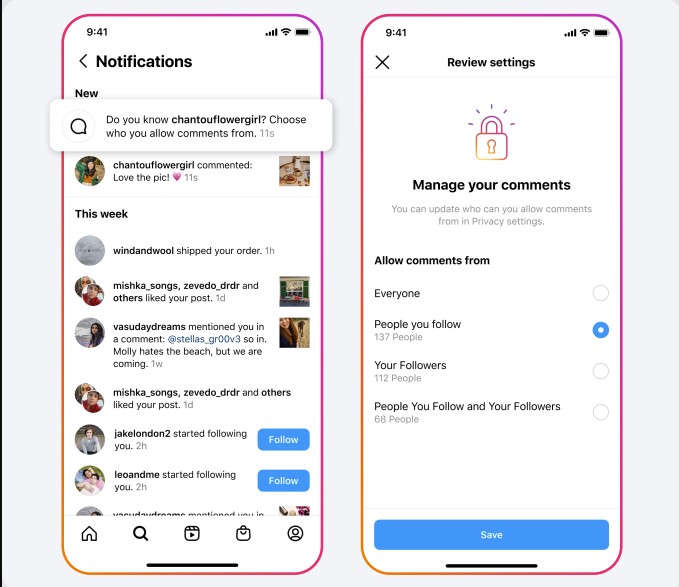

Today, Meta said that suspicious adults will no longer be able to see teen accounts when scrolling through the list of people who liked a post or through an account’s following list. If a suspicious adult follows a teen on Instagram, the teen will get a notification prompting them to review and remove the follower. Instagram will also remind teens to check and tighten their privacy settings when someone comments on their posts, tags or mentions them, or includes them in Reels remixes or guides.

Tech Gadget Central Latest Tech News and Reviews
