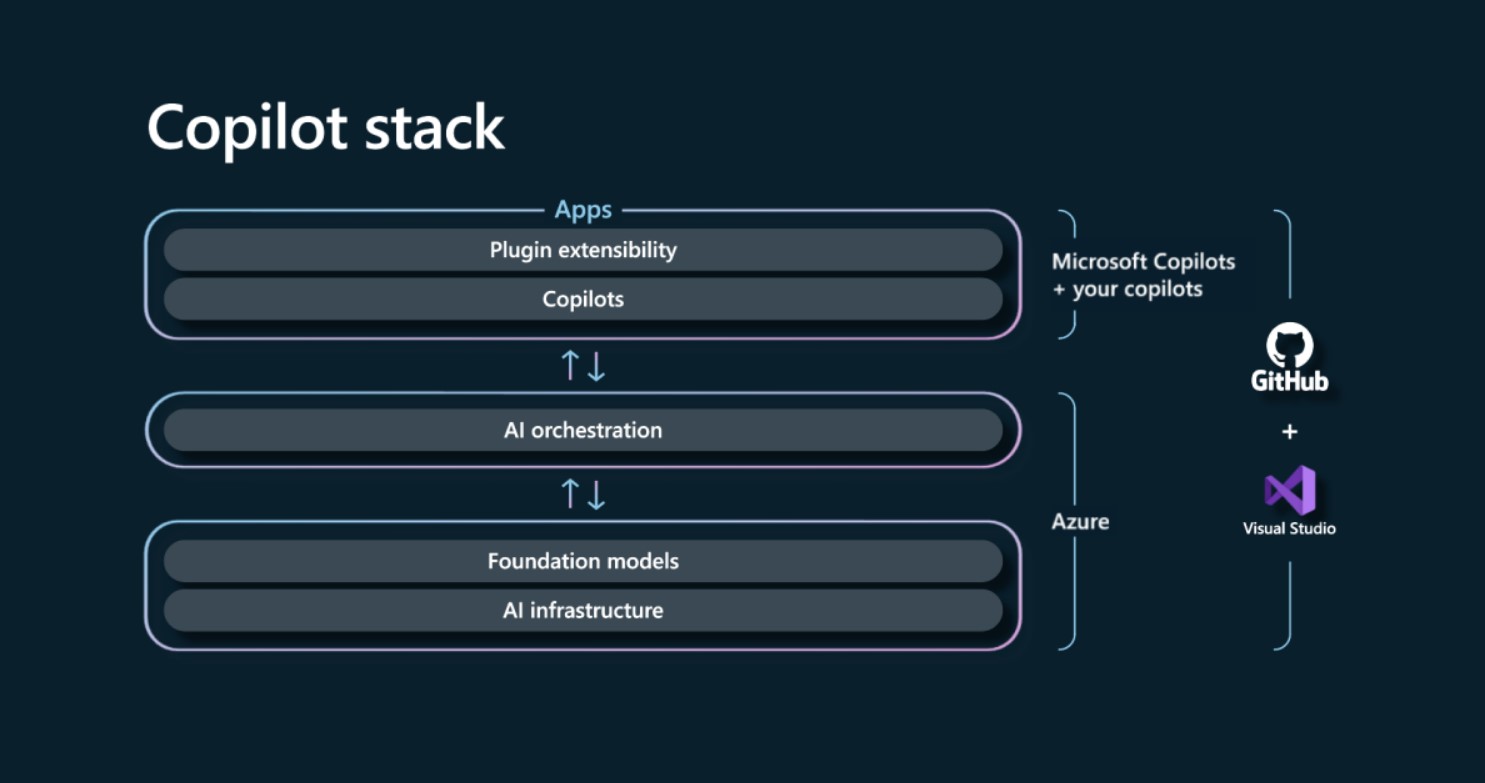

Microsoft wants companies to build AI-powered “copilots” using Azure tools and OpenAI machine learning models.

At its annual Build conference, Microsoft launched Azure AI Studio, a new capability within the Azure OpenAI Service that lets customers combine a model like OpenAI’s ChatGPT or GPT-4 with their own data—text or images—to build a chat assistant or other app that “reasons over” the private data. Azure OpenAI Service, Microsoft’s fully managed, enterprise-focused product, gives businesses access to OpenAI’s technologies with governance features.

Microsoft defines a “copilot” as a chatbot app that uses AI, usually text- or image-generating AI, to help with tasks such as writing a sales pitch or creating images for a presentation. Bing Chat is one example. Unlike copilots built with Azure AI Studio, however, Microsoft's own AI-powered copilots can't use a company's proprietary data.

In Azure AI Studio, developers can securely ground Azure OpenAI Service models on their own data without having to train the model, according to Microsoft AI platform CVP John Montgomery. “Building their own copilots accelerates our customers’ ...”

Building a copilot in Azure AI Studio starts with choosing a generative AI model such as GPT-4. Next, the developer gives the copilot a “meta-prompt” that describes its role.
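As a rough illustration of those two steps, the sketch below pairs a model name with a meta-prompt and turns a user request into a message list. It assumes the system/user message convention used by OpenAI's chat models. `CopilotConfig` and `build_messages` are hypothetical names made up for this example, not part of Azure AI Studio, and no service is called.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CopilotConfig:
    """Hypothetical container for the two choices described above."""
    model: str        # step 1: the generative model, e.g. "gpt-4"
    meta_prompt: str  # step 2: text describing the copilot's role


def build_messages(config: CopilotConfig, user_input: str) -> List[Dict[str, str]]:
    """Place the meta-prompt first as a system message, then the user's request.

    Assumption: the chat-style role/content message format; the article does
    not describe the actual Azure AI Studio interface.
    """
    return [
        {"role": "system", "content": config.meta_prompt},
        {"role": "user", "content": user_input},
    ]
```

Keeping the meta-prompt in a separate configuration object means the copilot's role can be changed without touching the code that handles user input.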

Copilots created in Azure AI Studio can store a user's conversation in the cloud so that they can keep track of it and respond appropriately. Plug-ins extend copilots with access to third-party data and services.
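The two ideas in that paragraph, conversation storage and plug-ins, can be sketched in a few lines. This is only an illustration under stated assumptions: `ConversationStore` is an in-memory stand-in for whatever cloud storage the service uses, and `PluginRegistry` is a hypothetical way to route a request to a third-party handler. Neither name comes from Microsoft's tooling.

```python
from typing import Callable, Dict, List


class ConversationStore:
    """In-memory stand-in (assumption) for cloud-backed conversation storage."""

    def __init__(self) -> None:
        self._history: Dict[str, List[Dict[str, str]]] = {}

    def append(self, conversation_id: str, role: str, content: str) -> None:
        # Record one turn under the conversation it belongs to.
        self._history.setdefault(conversation_id, []).append(
            {"role": role, "content": content}
        )

    def history(self, conversation_id: str) -> List[Dict[str, str]]:
        # Return a copy so callers cannot mutate stored turns by accident.
        return list(self._history.get(conversation_id, []))


class PluginRegistry:
    """Hypothetical registry mapping plug-in names to callables."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, handler: Callable[[str], str]) -> None:
        self._plugins[name] = handler

    def call(self, name: str, query: str) -> str:
        if name not in self._plugins:
            raise KeyError(f"no plug-in registered under {name!r}")
        return self._plugins[name](query)
```

In use, earlier turns returned by `history()` would be sent along with each new request, and a plug-in's result would be added to the prompt as extra context.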

Microsoft believes the value of Azure AI Studio lies in letting customers use OpenAI's models on their own data in compliance with organizational policies and access rights, and without compromising security, data policies, or document ranking. Customers can integrate structured, unstructured, or semi-structured data from inside their organization or from external sources.
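One way to picture grounding that respects access rights and document ranking is to filter an already-ranked list of documents by the user's permissions before any text reaches the model. The sketch below assumes a simple group-based permission model. `Document` and `visible_documents` are hypothetical names for illustration and do not describe how Azure AI Studio enforces access internally.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: FrozenSet[str]  # groups permitted to read this document


def visible_documents(
    ranked_docs: List[Document], user_groups: FrozenSet[str]
) -> List[Document]:
    """Keep only documents the user may read, preserving the input ranking.

    Assumption: a document is readable if the user belongs to at least one
    of its allowed groups.
    """
    return [doc for doc in ranked_docs if doc.allowed_groups & user_groups]
```

Because the filter is a single pass over the ranked list, the relative order of the remaining documents is unchanged, so access control does not disturb ranking.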

Microsoft is promoting customized models built with Azure AI Studio, which could become a lucrative revenue stream. The company says the Azure OpenAI Service already serves more than 4,500 companies, including Coursera, Grammarly, Volvo, and IKEA.

Azure OpenAI Service upgrades

To encourage adoption of the Azure OpenAI Service, Microsoft is expanding capacity for high-volume customers.

Azure OpenAI Service customers can now reserve and deploy model processing capacity on a monthly or yearly basis using the new Provisioned Throughput SKU. Customers can buy provisioned throughput units (PTUs) to deploy OpenAI models like GPT-3.5 Turbo or GPT-4 with reserved processing capacity for the duration of the commitment period.

OpenAI previously offered dedicated capacity for ChatGPT through its API. The Provisioned Throughput SKU greatly expands on this and is aimed squarely at enterprises.

“With reserved processing capacity, customers can expect consistent latency and throughput for workloads with consistent characteristics such as prompt size, completion size, and number of concurrent API requests,” a Microsoft spokesperson said.

Tech Gadget Central Latest Tech News and Reviews
