Writing a report on the state of AI must feel a lot like building on shifting sands: by the time you hit “publish,” the whole industry has already changed beneath your feet. Even so, Stanford’s 386-page attempt to describe this complicated, rapidly evolving field contains significant trends and takeaways.

The AI Index, produced by Stanford’s Institute for Human-Centered Artificial Intelligence (HAI), worked with experts from both academia and industry to gather data and projections. As a yearly effort (and given its size, you can bet the team is already hard at work laying out the next one), it may not be the freshest take on AI, but recurring broad surveys like this are crucial for keeping a finger on the pulse of the industry.

This year’s report extends its look at AI policy to a hundred new countries and offers “new analysis on foundation models, including their geopolitics and training costs, the environmental impact of AI systems, K-12 AI education, and public opinion trends in AI.”

Let’s list the key points in bullet form:

- AI development has flipped over the last decade from being academia-led to being industry-led by a large margin, and this shows no sign of changing.

- It’s becoming difficult to test models on traditional benchmarks, and a new paradigm may be needed here.

- The energy footprint of AI training and use is becoming considerable, but we have yet to see how it may add efficiencies elsewhere.

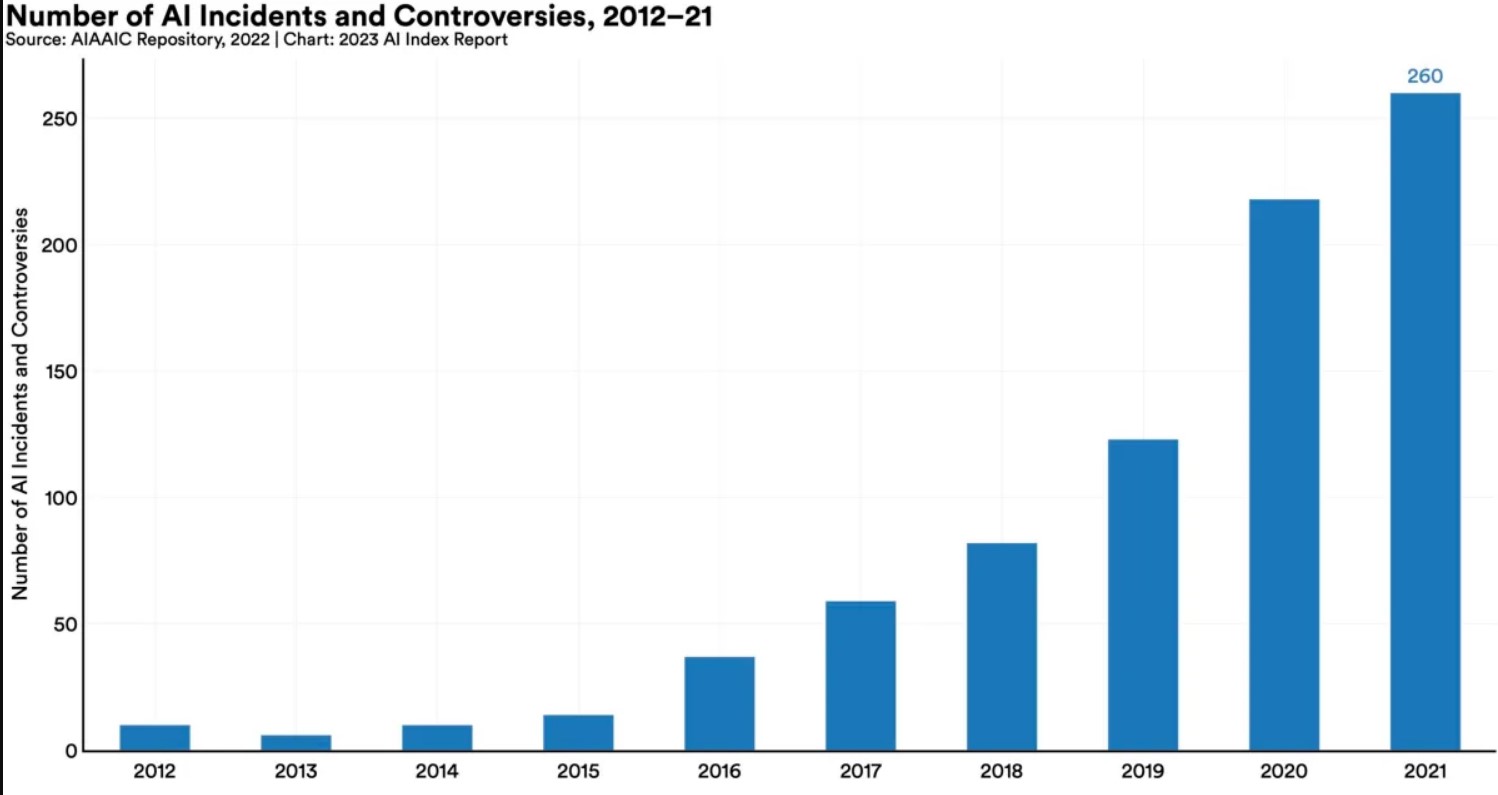

- The number of “AI incidents and controversies” has increased by a factor of 26 since 2012, which actually seems a bit low.

- AI-related skills and job postings are increasing, but not as fast as you’d think.

- Policymakers, however, are falling over themselves trying to write a definitive AI bill, a fool’s errand if there ever was one.

- Investment has temporarily stalled, but that’s after an astronomical increase over the last decade.

- More than 70% of Chinese, Saudi, and Indian respondents felt AI had more benefits than drawbacks. Americans? 35%.

The report covers a wide range of topics and subtopics in great detail, yet it remains quite readable and nontechnical. Only the dedicated will read all 386 pages, but really, just about anyone motivated could.

Let’s take a closer look at Chapter 3, Technical AI Ethics.

Bias and toxicity are difficult to reduce to metrics, but insofar as we can define and test models for them, it is clear that “unfiltered” models are much, much easier to lead into dangerous territory. Instruction tuning, which means adding a layer of extra preparation (such as a hidden prompt) or passing the model’s output through a second mediator model, can reduce this problem, although it is far from a complete fix.

This diagram best demonstrates the rise in “AI incidents and controversies” mentioned in the bullets:

As you can see, the trend is upward, and these figures were collected before ChatGPT and other large language models came into wide use, not to mention the rapid advances in image generators. You can be sure the 26x increase is only the beginning.

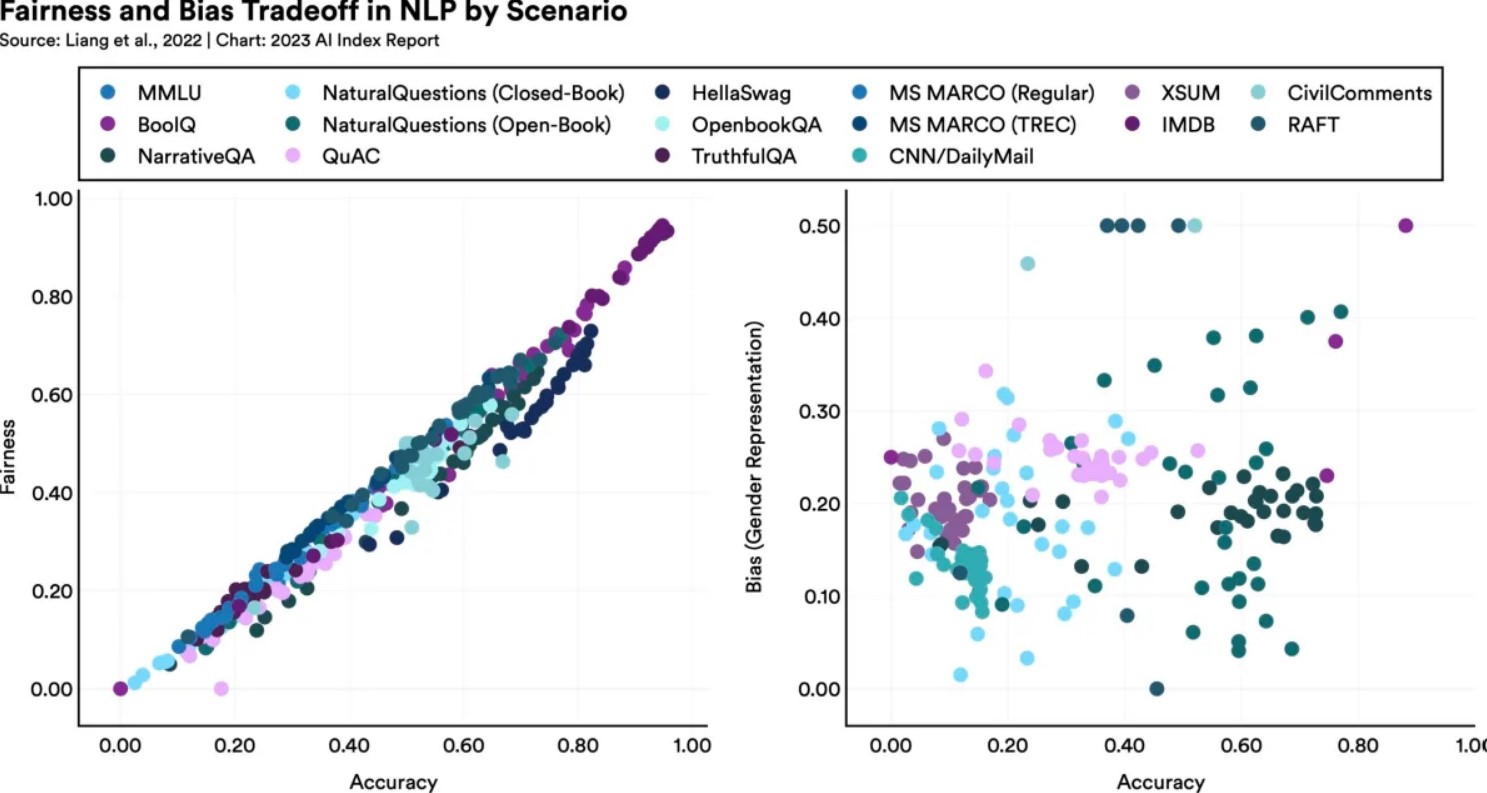

As this figure illustrates, improving the fairness or objectivity of a model may have unanticipated effects on other metrics.

Language models that do better on particular fairness metrics tend to have worse gender bias, the report states. Why? It’s difficult to say, but it goes to show that optimization is more complicated than most people think. Because we don’t fully understand how these enormous models work, there is no straightforward way to improve them.

Fact-checking is one of those fields where AI seems like a perfect fit: having indexed a large portion of the web, it could evaluate claims and return a confidence that they are supported by reliable sources. This is very far from the case. In fact, AI is exceptionally bad at evaluating factuality, and the worry is not so much that AI systems will be unreliable fact-checkers but that they will themselves become powerful sources of convincing misinformation. Although numerous studies and datasets have been created to test and improve AI fact-checking, we are still essentially where we started.

Fortunately, interest in this area has grown considerably, for the obvious reason that the entire industry would suffer if people lost confidence in AI. Submissions to the ACM Conference on Fairness, Accountability, and Transparency have risen sharply, and at NeurIPS, topics like fairness, privacy, and interpretability are receiving more attention and stage time.

These highlights of highlights leave out a great deal. However, the HAI team did a fantastic job of organizing the content, so after reading the high-level material here, you can download the full report and dig deeper into any subject that interests you.

Tech Gadget Central Latest Tech News and Reviews