Nvidia says a computer chip with more than 400 billion transistors can train artificial intelligence models faster and with less energy, but the company has not yet announced its price.

Nvidia’s latest technology is a very powerful “superchip” for training artificial intelligence models. The US computing company, which has recently surged in value to become the third most valuable company in the world, has not yet announced pricing for the new chips, but analysts expect a high price that will put them within reach of only a few organizations.

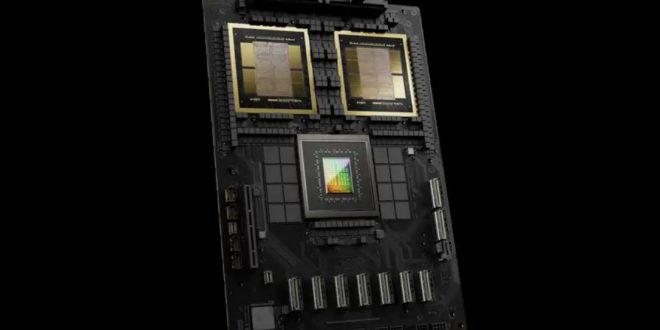

On March 18, Nvidia CEO Jensen Huang announced the chips at a press conference in San Jose, California. He showed off the company’s new Blackwell B200 graphics processing units (GPUs), which have 208 billion transistors, the tiny switches at the heart of modern computing devices. By comparison, Nvidia’s current-generation Hopper chips have 80 billion transistors. He also unveiled the GB200 Grace Blackwell Superchip, which combines two B200 chips whose GPUs together account for 416 billion transistors.

According to Huang, Blackwell is poised to become an exceptional system for generative artificial intelligence. “In the future, data centers will be regarded as artificial intelligence factories,” he said.

GPUs have become highly sought-after hardware for organizations that want to train large AI models. During the AI chip shortages of 2023, Elon Musk remarked that GPUs were harder to get than drugs, and some academic researchers without access to GPUs complained about being shut out.

According to Nvidia, the Blackwell chips can deliver up to 30 times the performance of Hopper GPUs when running generative AI services based on large language models such as OpenAI’s GPT-4, while using 25 times less energy.

By the company’s account, training GPT-4 required an estimated 8000 Hopper GPUs and 15 megawatts of power over 90 days. The same training could be done with just 2000 Blackwell GPUs drawing a little over 4 megawatts.
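To put those training figures on a common footing, the short Python sketch below converts them into total energy and per-GPU power. It rests on assumptions that are not in the article: that power draw is constant throughout the run, that the Blackwell run also lasts 90 days, and that "a little over 4 megawatts" can be rounded to exactly 4. The function and variable names are illustrative only.

```python
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in megawatt-hours for a constant power draw over a number of days."""
    return power_mw * days * HOURS_PER_DAY


def power_per_gpu_watts(power_mw: float, gpu_count: int) -> float:
    """Average facility power per GPU, in watts."""
    return power_mw * 1_000_000 / gpu_count


# Figures quoted in the article; the 90-day Blackwell duration and the
# exact 4.0 MW value are assumptions made for this illustration.
hopper = {"gpus": 8000, "power_mw": 15.0, "days": 90}
blackwell = {"gpus": 2000, "power_mw": 4.0, "days": 90}

hopper_energy = training_energy_mwh(hopper["power_mw"], hopper["days"])
blackwell_energy = training_energy_mwh(blackwell["power_mw"], blackwell["days"])

energy_ratio = hopper_energy / blackwell_energy
gpu_ratio = hopper["gpus"] / blackwell["gpus"]

print(f"Hopper run:    {hopper_energy:,.0f} MWh")
print(f"Blackwell run: {blackwell_energy:,.0f} MWh")
print(f"Energy ratio:  {energy_ratio:.2f}x, GPU count ratio: {gpu_ratio:.0f}x")
print(f"Power per GPU: {power_per_gpu_watts(hopper['power_mw'], hopper['gpus']):,.0f} W (Hopper), "
      f"{power_per_gpu_watts(blackwell['power_mw'], blackwell['gpus']):,.0f} W (Blackwell)")
```

Under these assumptions, the training example implies roughly a fourfold reduction in energy, well short of the 25-fold figure, which Nvidia quotes for running generative AI services rather than for training.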

The company has not yet announced pricing for the Blackwell GPUs, but they are likely to be very expensive, given that Hopper GPUs currently cost between $20,000 and $40,000 apiece. According to Sasha Luccioni of Hugging Face, a company that develops AI code and datasets, the focus on building more powerful and more expensive chips will limit their availability to a small number of organizations and countries. “In addition to the environmental consequences of this already highly energy-consuming technology, this is a significant moment for the AI community, akin to Marie Antoinette’s ‘let them eat cake’ moment,” she says.

Electricity demand from data centers, driven largely by the rapid growth of generative AI, is projected to double by 2026, reaching a level comparable to Japan’s current energy consumption. If the data centers that power AI training continue to rely on fossil fuel power plants, carbon emissions could rise significantly.

Rising global demand for GPUs has also created geopolitical problems for Nvidia amid escalating tensions and strategic rivalry between the United States and China. The US government has imposed export controls on advanced chip technology to slow China’s AI development, a measure it considers crucial for national security. As a result, Nvidia has been forced to produce less powerful versions of its chips for Chinese customers.

Tech Gadget Central Latest Tech News and Reviews
