DeepMind, Google's AI research and development lab, believes that finding novel solutions to difficult geometry problems could be key to building more capable AI systems.

With this in mind, DeepMind has introduced AlphaGeometry, a system that the lab claims can solve about as many geometry problems as the average International Mathematical Olympiad (IMO) gold medalist. The code for AlphaGeometry was open-sourced this morning. On a benchmark of 30 Olympiad geometry problems, AlphaGeometry solved 25 within the standard competition time limit. The previous state-of-the-art system solved 10.

In a blog post published this morning, Google AI research scientists Trieu Trinh and Thang Luong wrote, “Solving Olympiad-level geometry problems is an important milestone in developing deep mathematical reasoning on the path toward more advanced and general AI systems.” They added, “[We] are hopeful that… AlphaGeometry contributes to the expansion of opportunities in the fields of mathematics, science, and artificial intelligence.”

Why focus on geometry? According to DeepMind, proving mathematical theorems such as the Pythagorean theorem requires both reasoning and the ability to choose among many possible steps toward a solution. If DeepMind is right, this problem-solving approach could prove useful in future general-purpose AI systems.

“Demonstrating that a particular conjecture is true or false stretches the abilities of even the most advanced AI systems today,” DeepMind’s press materials read. The materials argue that the ability to prove mathematical theorems is an important milestone toward more capable AI. It demonstrates mastery of logical reasoning and the ability to acquire new knowledge.

But training an AI system to solve geometry problems poses unique challenges.

Proofs are difficult to translate into a machine-readable format, so usable geometry training data is scarce. And many cutting-edge generative AI models excel at finding patterns and relationships in data, yet they can’t reason logically through a proof.

To build AlphaGeometry, the researchers paired a “neural language” model, architecturally similar to ChatGPT, with a “symbolic deduction engine.” A symbolic deduction engine applies rules (such as mathematical rules) to infer solutions to problems. Symbolic engines can be inflexible and slow, especially when dealing with large or complicated datasets. DeepMind mitigated these issues by having the neural model “guide” the deduction engine through the many possible solutions to a given geometry problem.

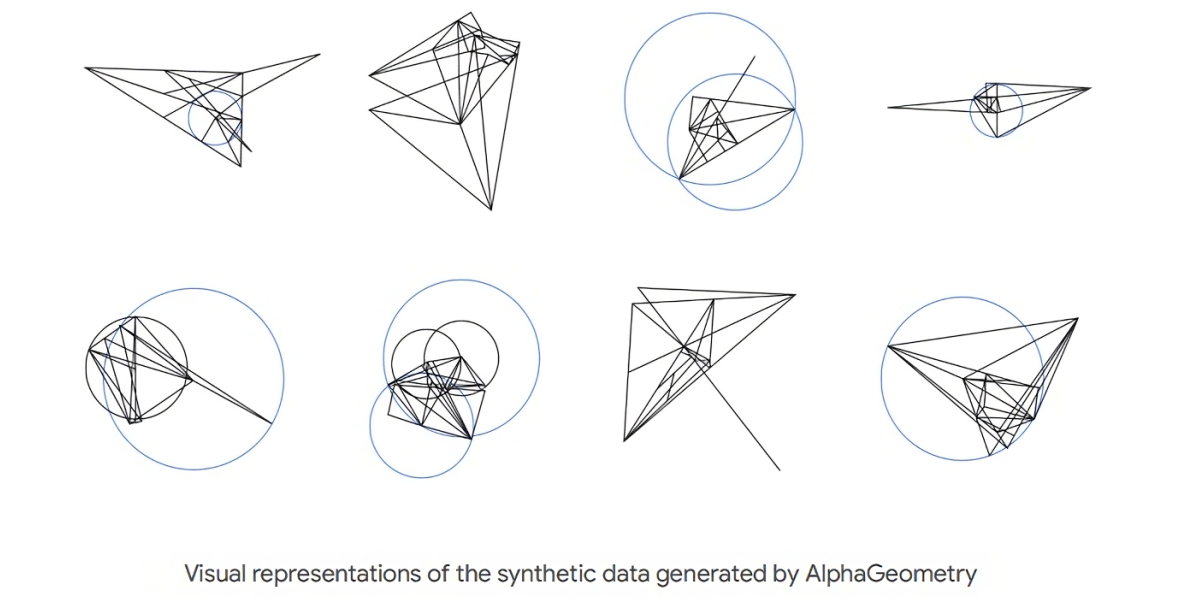

Rather than relying on scarce human-written proofs, DeepMind generated its own synthetic training data: 100 million “synthetic theorems” and proofs of varying complexity. The lab then trained AlphaGeometry from scratch on this synthetic data and evaluated it on Olympiad geometry problems.
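
The article doesn’t describe how the synthetic theorems were produced. As a rough illustration of one way to do it, here is a minimal sketch that assumes a toy representation in which facts are plain strings and each deduction rule maps a set of premise facts to one conclusion. It samples random premises and derives everything that follows from them. It then traces each derived fact back to the premises it actually needs and uses the number of derivation steps as a crude difficulty score. The names `forward_chain_with_parents`, `trace_back`, and `synthesize` are hypothetical, and this is not DeepMind’s pipeline.

```python
import random
from typing import Dict, FrozenSet, List, Sequence, Set, Tuple

# Hypothetical toy representation: a rule is (premise facts, conclusion fact).
Rule = Tuple[FrozenSet[str], str]


def forward_chain_with_parents(
    premises: Sequence[str], rules: Sequence[Rule]
) -> Dict[str, FrozenSet[str]]:
    """Derive every reachable fact and record the facts it was derived from."""
    parents: Dict[str, FrozenSet[str]] = {p: frozenset() for p in premises}
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            # issubset() accepts any iterable; iterating a dict yields its keys.
            if head not in parents and body.issubset(parents):
                parents[head] = body
                changed = True
    return parents


def trace_back(fact: str, parents: Dict[str, FrozenSet[str]]) -> Tuple[Set[str], int]:
    """Return the premises `fact` depends on and the number of rule applications."""
    needed: Set[str] = set()
    seen: Set[str] = set()
    steps = 0
    stack = [fact]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        body = parents[current]
        if body:
            steps += 1  # one rule application produced `current`
            stack.extend(body)
        else:
            needed.add(current)  # no parents: `current` is a sampled premise
    return needed, steps


def synthesize(
    premise_pool: List[str], rules: Sequence[Rule], n_premises: int, seed: int = 0
) -> List[Tuple[FrozenSet[str], str, int]]:
    """Sample premises, deduce, and emit (needed premises, theorem, proof steps)."""
    rng = random.Random(seed)
    premises = rng.sample(premise_pool, n_premises)
    parents = forward_chain_with_parents(premises, rules)
    theorems = []
    for fact, body in parents.items():
        if body:  # skip the sampled premises themselves
            needed, steps = trace_back(fact, parents)
            theorems.append((frozenset(needed), fact, steps))
    return theorems


# Hypothetical toy rules: a and b give c, c gives d, x gives y.
RULES: List[Rule] = [
    (frozenset({"a", "b"}), "c"),
    (frozenset({"c"}), "d"),
    (frozenset({"x"}), "y"),
]
```

With these toy rules, `synthesize(["a", "b", "x", "z"], RULES, n_premises=4)` yields three “theorems.” `c` and `d` both need only `a` and `b`, taking one and two steps respectively. `y` needs only `x`. The unused premise `z` is dropped by the traceback, which keeps each theorem’s statement minimal.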

Olympiad geometry problems are based on diagrams. Solving them often requires adding “constructs” such as points, lines, or circles to the diagram. Given one of these problems, AlphaGeometry’s neural model predicts which constructs might be useful to add. AlphaGeometry’s symbolic engine then uses those predictions to make deductions about the diagram and work toward a solution.
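
To make the division of labor concrete, here is a minimal sketch of that loop. It assumes a toy representation in which facts are strings and rules map a set of premise facts to a conclusion. The symbolic engine is simple forward chaining to a fixed point. The “language model” is a hard-coded, hypothetical `toy_propose` function that returns ranked constructs. The names `deduce_closure`, `solve`, and `toy_propose` are illustrative, not DeepMind’s code. The facts loosely follow the classic proof that the base angles of an isosceles triangle are equal, which adds the midpoint of the base as an auxiliary point.

```python
from typing import Callable, FrozenSet, Iterable, List, Set, Tuple

# Hypothetical toy representation: a rule fires when all its premises are known.
Rule = Tuple[FrozenSet[str], str]
# A construct is a name plus the new facts that adding it to the diagram yields.
Construct = Tuple[str, Set[str]]


def deduce_closure(facts: Set[str], rules: Iterable[Rule]) -> Set[str]:
    """Stand-in for the symbolic engine: apply rules until nothing new follows."""
    known = set(facts)
    rule_list = list(rules)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rule_list:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


def solve(
    premises: Set[str],
    goal: str,
    rules: List[Rule],
    propose: Callable[[Set[str], str], List[Construct]],
    max_constructs: int = 3,
) -> Tuple[bool, List[str]]:
    """Alternate exhaustive deduction with model-suggested constructs."""
    facts = set(premises)
    added: List[str] = []
    while True:
        facts = deduce_closure(facts, rules)
        if goal in facts:
            return True, added
        if len(added) >= max_constructs:
            return False, added
        # Greedily take the highest-ranked suggestion not tried yet.
        fresh = [c for c in propose(facts, goal) if c[0] not in added]
        if not fresh:
            return False, added
        name, new_facts = fresh[0]
        added.append(name)
        facts |= new_facts


RULES: List[Rule] = [
    (frozenset({"AB = AC", "BM = MC", "AM = AM"}), "ABM congruent ACM"),
    (frozenset({"ABM congruent ACM"}), "angle ABC = angle ACB"),
]


def toy_propose(facts: Set[str], goal: str) -> List[Construct]:
    """Hypothetical stand-in for the language model's ranked suggestions."""
    return [
        ("point D on AB", {"D on AB"}),
        ("midpoint M of BC", {"BM = MC", "AM = AM"}),
    ]
```

Calling `solve({"AB = AC"}, "angle ABC = angle ACB", RULES, toy_propose)` returns `(True, ["point D on AB", "midpoint M of BC"])`. The first, unhelpful suggestion leaves the goal out of reach, and adding the midpoint lets the engine finish the proof. This greedy loop never backtracks. A practical system would more likely search over several candidate constructs at once.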

“With so many examples of how these constructs led to proofs, AlphaGeometry’s language model is able to make good suggestions for new constructs when presented with Olympiad geometry problems,” Trinh and Luong write. The language model supplies fast, “intuitive” ideas, while the symbolic engine handles the more deliberate, rational decision-making.

AlphaGeometry’s problem-solving results were published in a study in the journal Nature this week. They are likely to fuel the long-running debate over how AI systems should be built. One camp favors symbol manipulation, which means manipulating symbols that represent knowledge according to rules. The other favors ostensibly more brain-like neural networks.

Proponents of the neural network approach argue that intelligent behavior, from speech recognition to image generation, can emerge from nothing more than massive amounts of data and computation. Symbolic systems solve tasks by defining sets of symbol-manipulating rules dedicated to particular jobs, such as editing a line in word processing software. Neural networks, by contrast, try to solve tasks through statistical approximation and learning from examples.

Neural networks are the foundation of powerful AI systems such as OpenAI’s DALL-E 3 and GPT-4. But supporters of symbolic AI argue that neural networks aren’t the be-all and end-all. Symbolic AI, they say, may be better positioned to efficiently encode the world’s knowledge, reason through complex scenarios, and “explain” how it arrived at an answer.

AlphaGeometry is a hybrid symbolic-neural system, akin to DeepMind’s AlphaFold 2 and AlphaGo. As such, it may demonstrate that combining the two approaches, symbol manipulation and neural networks, is the best path forward in the search for generalizable AI. Perhaps.

“Our long-term goal remains to build artificial intelligence systems that can generalize across mathematical fields, developing the sophisticated problem-solving and reasoning that general AI systems will depend on while simultaneously expanding the frontiers of human knowledge,” Trinh and Luong write. This approach could shape how future AI systems discover new knowledge, in mathematics and beyond.

Tech Gadget Central Latest Tech News and Reviews
