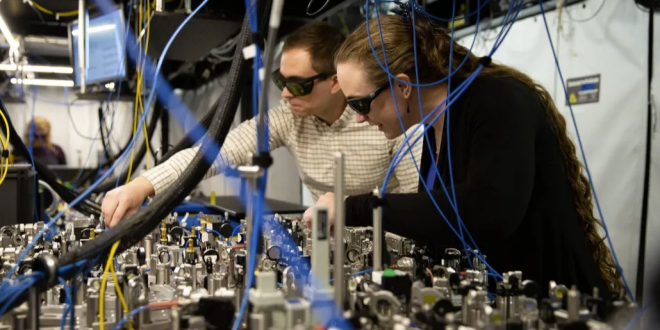

Microsoft and Quantinuum have made a significant advance in quantum error correction. Using Quantinuum’s ion-trap hardware and Microsoft’s new qubit-virtualization system, the team ran more than 14,000 individual experiments without a single error. The system also let the team diagnose and correct errors affecting the logical qubits without destroying them.

According to the two companies, this advance marks a significant step beyond today’s NISQ (noisy intermediate-scale quantum) computers. They are “noisy” because quantum systems are sensitive to even the slightest environmental disturbance, which makes them behave unpredictably (or “decohere”). They are “intermediate-scale” because current quantum computers offer only a limited number of qubits, topping out at slightly over a thousand. Qubits are the building blocks of quantum computing, analogous to bits in classical computers. Unlike bits, however, qubits can exist in a superposition of states and only take on a definite value when measured. This property is what promises computational capabilities beyond the reach of classical machines.

Whatever the number of qubits, noise limits how long an algorithm can run before decoherence sets in, so even a basic algorithm can end up too noisy to produce a useful result.

Using a combination of techniques, the team ran these experiments with an exceptionally high level of precision. Doing so required extensive preparation, including pre-selecting systems that looked to be in good shape for a successful run. Even so, the result is a significant improvement over the industry’s recent state of the art.

This is good news for quantum computing. Numerous challenges remain (and the results still need to be replicated), but in principle a computer with 100 of these logical qubits could already be useful for certain problems. According to Microsoft, a machine with 1,000 such logical qubits could offer a significant commercial advantage.

Using Quantinuum’s H2 trapped-ion processor, the team combined 30 physical qubits into four highly reliable logical qubits. Encoding one logical qubit across several physical qubits adds redundancy that safeguards against errors: the physical qubits are entangled with one another, so an error on any individual qubit can be detected and corrected.
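
The redundancy idea can be illustrated with its simplest classical analogue, a 3-bit repetition code. This is only an analogy under stated assumptions: it is not the code the team ran on the H2 processor, the function names (`encode`, `flip`, `decode`) are hypothetical, and real quantum codes cannot simply copy a qubit (the no-cloning theorem) and must also handle phase errors, which is why they rely on entanglement rather than copies.

```python
# Toy classical 3-bit repetition code (an analogy only; hypothetical names).


def encode(logical_bit: int, n: int = 3) -> list[int]:
    """Spread one logical bit across n physical bits by repetition."""
    return [logical_bit] * n


def flip(bits: list[int], index: int) -> list[int]:
    """Return a copy of `bits` with one bit inverted, modelling a single error."""
    corrupted = list(bits)
    corrupted[index] ^= 1
    return corrupted


def decode(bits: list[int]) -> int:
    """Majority vote: recovers the logical bit if fewer than half the bits flipped."""
    return int(sum(bits) > len(bits) // 2)


if __name__ == "__main__":
    # Every single-bit error on either logical value is corrected.
    for logical in (0, 1):
        for i in range(3):
            assert decode(flip(encode(logical), i)) == logical
    print("all single-bit errors corrected")
```

Any single flipped bit is outvoted by the other two, while two simultaneous flips fool the decoder; tolerating more errors requires spreading each logical bit over more physical bits.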

Error correction has long been a persistent challenge for the industry. The goal, naturally, is to minimize noise and maximize the quality of physical qubits. Without advanced error-correction techniques, however, there is no escaping the limitations of the NISQ era: these systems will inevitably decohere, which undermines their long-term viability.

According to Dennis Tom, general manager of Azure Quantum, and Krysta Svore, VP of Advanced Quantum Development at Microsoft, simply adding more physical qubits with a high error rate, without improving that error rate, is pointless: it only produces a larger quantum computer that is no more powerful than before. By contrast, combining high-quality physical qubits with a specialized orchestration and diagnostics system that enables virtual qubits makes it possible to build powerful, fault-tolerant quantum computers capable of longer and more complex calculations.

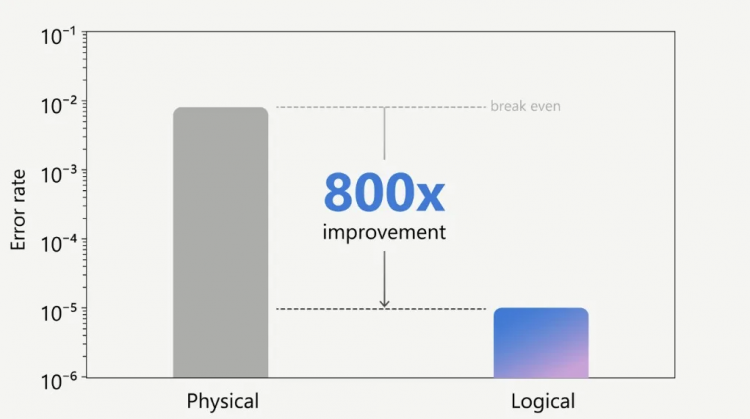

Logical qubits only began outperforming physical qubits in the past few years. Microsoft and Quantinuum now say their new hardware and software system demonstrates a large gap between physical and logical error rates: the logical qubits achieve an error rate 800 times lower than that of the physical qubits alone.
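
To see what a ratio like “800 times lower” means, a toy repetition code gives a worked example. Assuming each of three physical bits flips independently with probability p (a simplifying assumption; real devices also have correlated and non-bit-flip errors), majority voting fails only when two or three bits flip, so the logical error rate is p_L = 3p²(1 - p) + p³. The sketch computes this closed form, checks it by enumerating every error pattern, and reports the suppression factor p / p_L. Its numbers describe this hypothetical toy model only, not the reported experiment.

```python
# Logical vs physical error rate for a toy 3-bit repetition code with
# independent bit flips (an illustrative model, not the team's code).
from itertools import product


def logical_error_rate(p: float) -> float:
    """Closed form: majority vote fails when 2 or 3 of the 3 bits flip."""
    return 3 * p**2 * (1 - p) + p**3


def logical_error_rate_enumerated(p: float, n: int = 3) -> float:
    """Same quantity by summing over every error pattern (valid for odd n)."""
    total = 0.0
    for pattern in product((0, 1), repeat=n):
        flips = sum(pattern)
        if flips > n // 2:  # a majority flipped, so the decoder returns the wrong bit
            total += p**flips * (1 - p) ** (n - flips)
    return total


def suppression_factor(p: float) -> float:
    """How many times lower the logical error rate is than the physical one."""
    return p / logical_error_rate(p)


if __name__ == "__main__":
    for p in (0.1, 0.01, 0.001):
        print(f"p={p:<6} p_L={logical_error_rate(p):.3e} factor={suppression_factor(p):.1f}")
```

In this model the factor grows roughly as 1/(3p) as p shrinks, and at p = 0.5 it falls to 1, meaning no benefit at all. That is the same point Tom and Svore make: encoding only pays off once the physical error rate is already low.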

According to the researchers, moving beyond NISQ requires three things: a large separation between logical and physical error rates, the ability to correct errors in individual circuits, and the ability to create entanglement between at least two logical qubits. If these results are confirmed, the team has met all three criteria, marking entry into a more stable era: the era of resilient quantum computing.

The team’s key achievement appears to be its ability to perform “active syndrome extraction”: identifying and correcting errors without destroying the logical qubit.
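
Syndrome extraction can be sketched with the same kind of toy code. Instead of reading the data bits, the decoder looks only at the parities of neighbouring pairs; that syndrome pinpoints a single flipped bit but is identical whether the encoded value is 0 or 1, which is the classical analogue of diagnosing an error without disturbing the logical information. The names below (`extract_syndrome`, `correct`, `run_rounds`) are hypothetical, on real hardware the parities are measured through ancilla qubits rather than computed directly, and this is not Microsoft’s qubit-virtualization system.

```python
# Toy classical model of repeated syndrome extraction on a 3-bit repetition
# code. Hypothetical names; not the team's actual protocol.

# Each check compares a pair of neighbouring bits (their XOR is the parity).
CHECKS = [(0, 1), (1, 2)]

# Syndrome -> index of the single bit to flip back (None: no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def extract_syndrome(bits: list[int]) -> tuple[int, ...]:
    """Return only the pairwise parities: they locate a single flip, not the encoded value."""
    return tuple(bits[i] ^ bits[j] for i, j in CHECKS)


def correct(bits: list[int]) -> tuple[list[int], tuple[int, ...]]:
    """Apply the single-bit correction implied by the syndrome."""
    syndrome = extract_syndrome(bits)
    target = CORRECTION[syndrome]
    fixed = list(bits)
    if target is not None:
        fixed[target] ^= 1
    return fixed, syndrome


def run_rounds(logical_bit: int, error_positions: list) -> tuple[list[int], list]:
    """Each round: inject at most one flip (None = no error), then extract and correct."""
    bits = [logical_bit] * 3
    history = []
    for pos in error_positions:
        if pos is not None:
            bits[pos] ^= 1
        bits, syndrome = correct(bits)
        history.append(syndrome)
    return bits, history


if __name__ == "__main__":
    # The same error (bit 0 flipped) gives the same syndrome for logical 0 and 1.
    print(extract_syndrome([1, 0, 0]), extract_syndrome([0, 1, 1]))
    final, history = run_rounds(1, [0, None, 2, 1])
    print(final, history)
```

Running several rounds in a row mirrors the repeated rounds of syndrome extraction Tom and Svore describe: as long as at most one bit flips between rounds, each round repairs it and the encoded value survives.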

“This achievement represents an important advancement in the ability to fix errors without compromising the integrity of the logical qubits. It is a significant milestone in the field of quantum error correction,” Tom and Svore explain. “With our qubit-virtualization system, we were able to show an important part of reliable quantum computing, which led to a low rate of logical errors across many rounds of syndrome extraction.”

It is now up to the rest of the quantum community to reproduce these results and implement comparable error-correction systems. That is likely only a matter of time.

“Today’s results represent a significant milestone and demonstrate the ongoing progress of this collaboration in advancing the quantum ecosystem,” stated Ilyas Khan, the founder and chief product officer of Quantinuum. “Microsoft’s cutting-edge error correction, combined with the immense power of a quantum computer and a comprehensive approach, fills us with anticipation for the future of quantum applications. We eagerly await the benefits our customers and partners will derive from our solutions, particularly as we progress towards large-scale quantum processors.”

For more information, you can access the technical paper here.

Tech Gadget Central Latest Tech News and Reviews
